Objectives:

- Learn the fundamentals of messaging queues

- Understand the core features of a messaging queue service

- Learn more about the graceful degradation principle

- Learn how to monitor your messaging queue service

Messaging Queue

The Problem

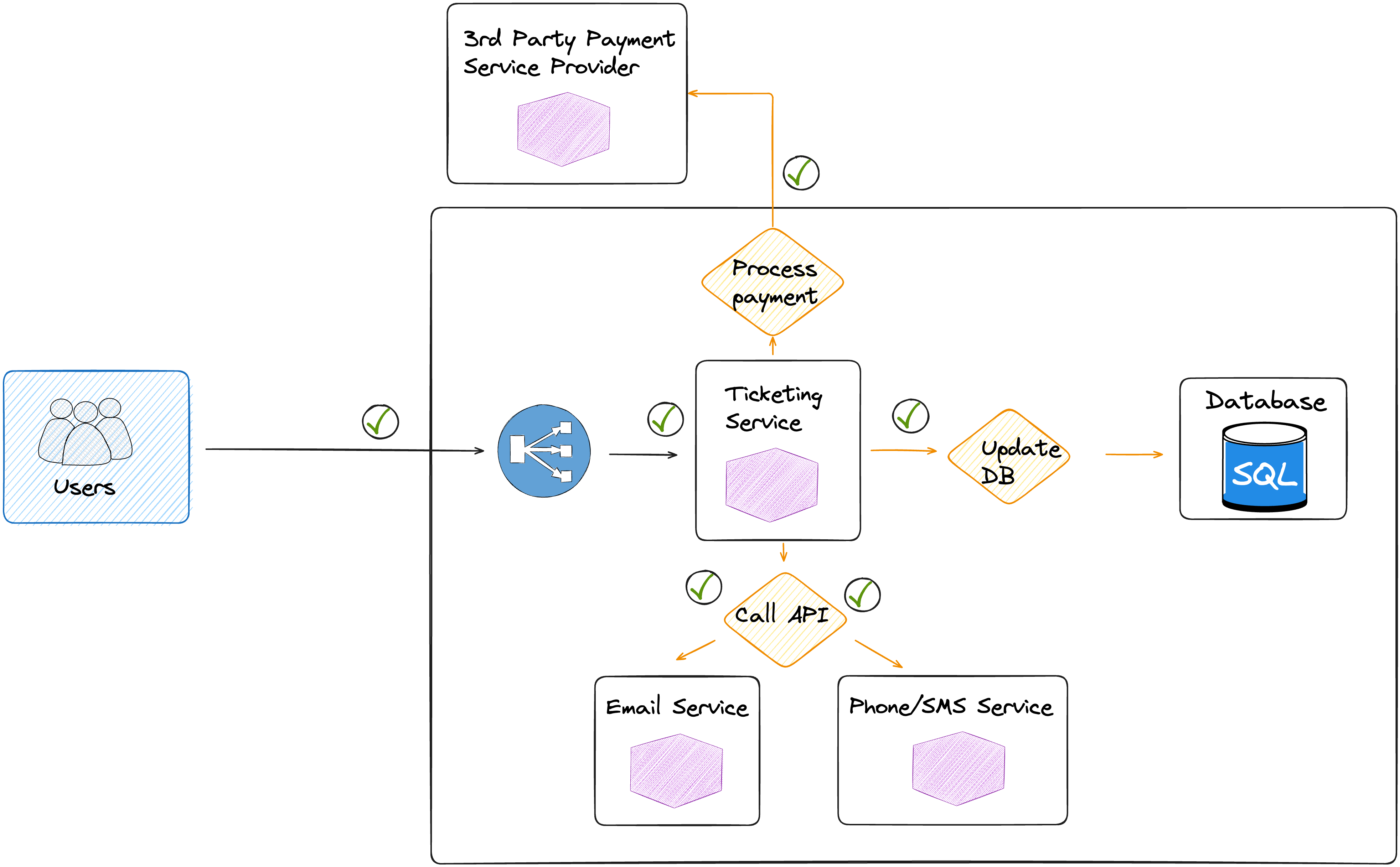

Let's assume your business sells tickets for events (concerts, shows, etc.). Your architecture is pretty simple:

- Users search for events and select a ticket to purchase.

- The purchase request is sent to a ticketing service.

- The payment service processes the payment.

- Once the payment is processed, the user and ticket details are updated in the database.

- Finally, the email service and the SMS/phone service notify the user with the purchase confirmation and the details of the event.

This flow is illustrated in the following diagram:

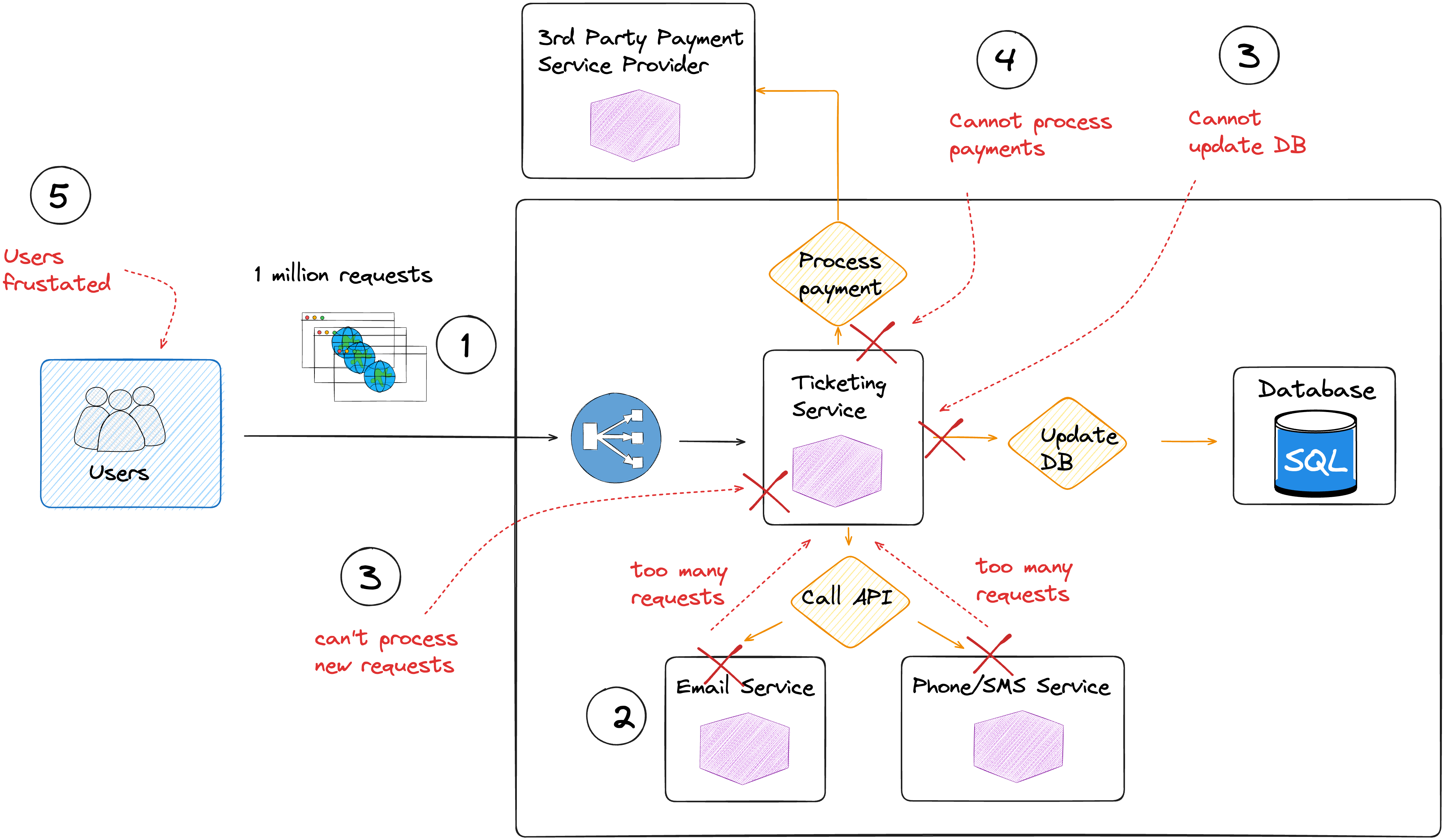

So far so good, until a major event comes along: an artist announces performances in multiple cities, and demand grows exponentially.

About 1 million users are trying to purchase tickets for the same event, and the system cannot handle the load:

- The email service and the SMS/phone service crash and fail to process the requests from the ticketing service.

- The ticketing service cannot process new requests.

- Payments are no longer processed, which has a huge impact and means lost revenue.

- Users are frustrated with their experience (slow responses, etc.), and some abandon the purchase.

- Purchased tickets and user information cannot be updated in the database.

- Users don't receive notifications of their purchase and send you complaints.

As illustrated in the following diagram, the bottleneck creates cascading failures.

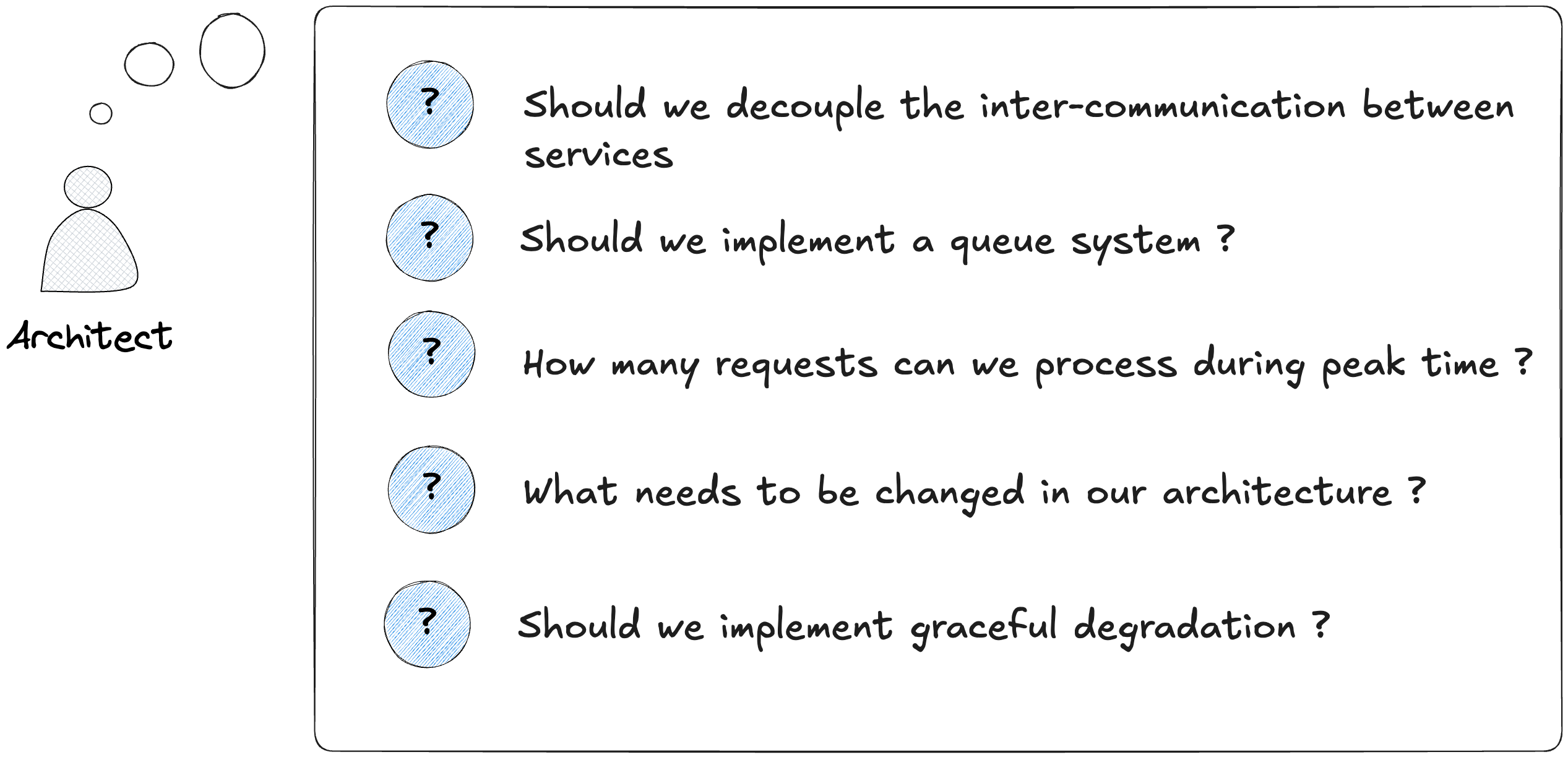

There are a few ways to solve this problem; one of them is to use a message queue system.

What's a messaging queue system?

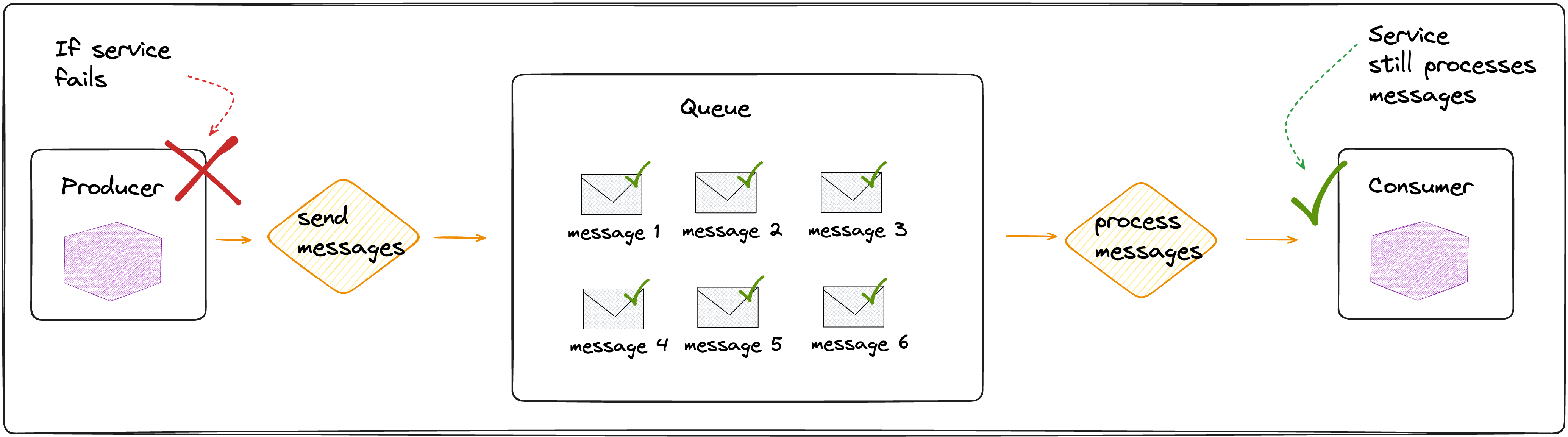

A messaging queue system lets services send and receive messages without calling each other directly. It decouples service-to-service communication by adding an intermediate layer, which helps prevent cascading failures when one of the services goes down.

Instead of making a direct API call to another service, the sender puts the message on a queue (asynchronously), and the receiver retrieves (polls) the message from the queue. Neither service depends tightly on the other, which makes the system more reliable and fault-tolerant.

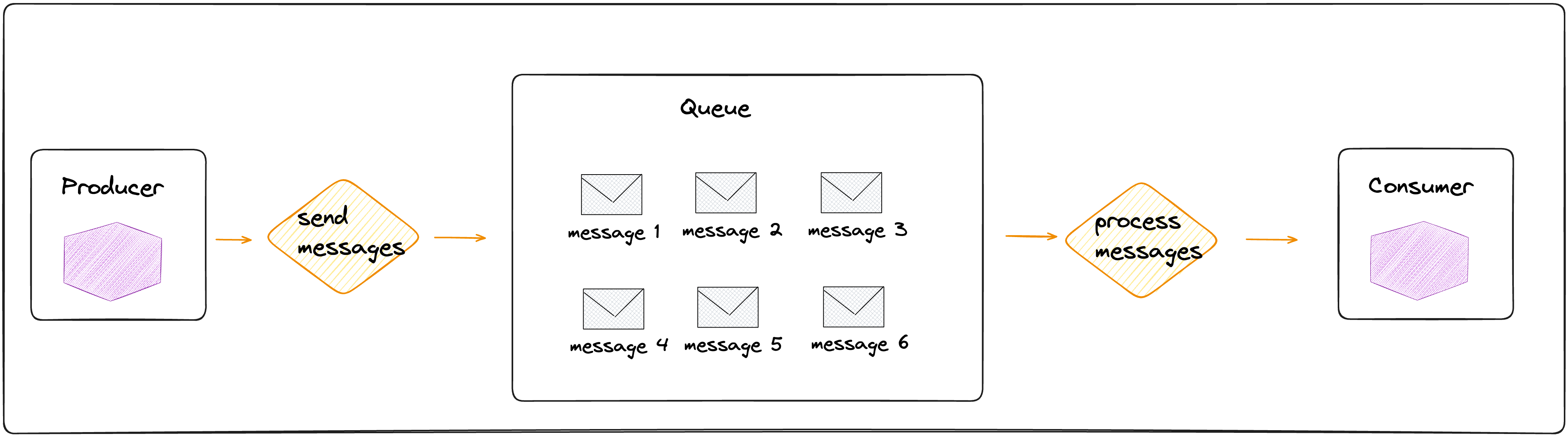

The main components of a messaging queue system are:

- Producer: the application that sends messages to the message queue system.

- Queue: stores the messages until they are consumed.

- Consumer: the application that receives messages from the message queue system.

The main advantages of a messaging queue:

- The sender (application/producer) doesn't have to wait for a response from another application.

- The receiver (application/consumer) can process the messages at any time.

- Multiple consumers can process messages concurrently.

- Messages aren't lost if a service goes down; they stay in the queue for later processing.
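The producer/queue/consumer split can be sketched in a few lines of Python. This is a minimal in-process illustration, assuming the standard library `queue.Queue` as a stand-in for a real broker; the function names `ticketing_service` and `email_service` and the `None` stop marker are hypothetical and exist only for this sketch.

```python
import queue
import threading

# In-process stand-in for a message broker (assumption for this sketch).
ticket_queue = queue.Queue()
sent_emails = []


def ticketing_service(ticket_ids):
    """Producer: enqueue one event per ticket and return without waiting."""
    for ticket_id in ticket_ids:
        ticket_queue.put({"event": "TICKET_PURCHASED", "ticket_id": ticket_id})


def email_service():
    """Consumer: pull events at its own pace until the stop marker arrives."""
    while True:
        message = ticket_queue.get()
        if message is None:  # stop marker used only by this sketch
            break
        sent_emails.append(f"confirmation for {message['ticket_id']}")


def run_demo():
    """Run one producer and one consumer thread; return the emails 'sent'."""
    sent_emails.clear()
    worker = threading.Thread(target=email_service)
    worker.start()
    ticketing_service(["TCKT-1", "TCKT-2", "TCKT-3"])
    ticket_queue.put(None)
    worker.join()
    return list(sent_emails)


if __name__ == "__main__":
    print(run_demo())
```

The producer returns as soon as its messages are enqueued, while the consumer thread drains them on its own schedule. A real broker would add persistence and network transport on top of this idea.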

Here is an example of a message/event (JSON) that can be sent between sender and receiver:

{

"event": "TICKET_PURCHASED",

"timestamp": "2025-10-06T14:32:00Z",

"data": {

"ticket_id": "TCKT-98423",

"event_id": "EVT-12345",

"event_name": "Jazz World Tour 2025 - Marseille",

"user": {

"user_id": "USR-7821",

"name": "John Doe",

"email": "john.doe@minimalistsa.com"

},

"seat": {

"section": "A",

"row": "5",

"seat_number": "42"

},

"price": {

"amount": 25.00,

"currency": "EUR"

},

"payment": {

"transaction_id": "PAY-982374",

"status": "SUCCESS",

"method": "Credit Card"

}

}

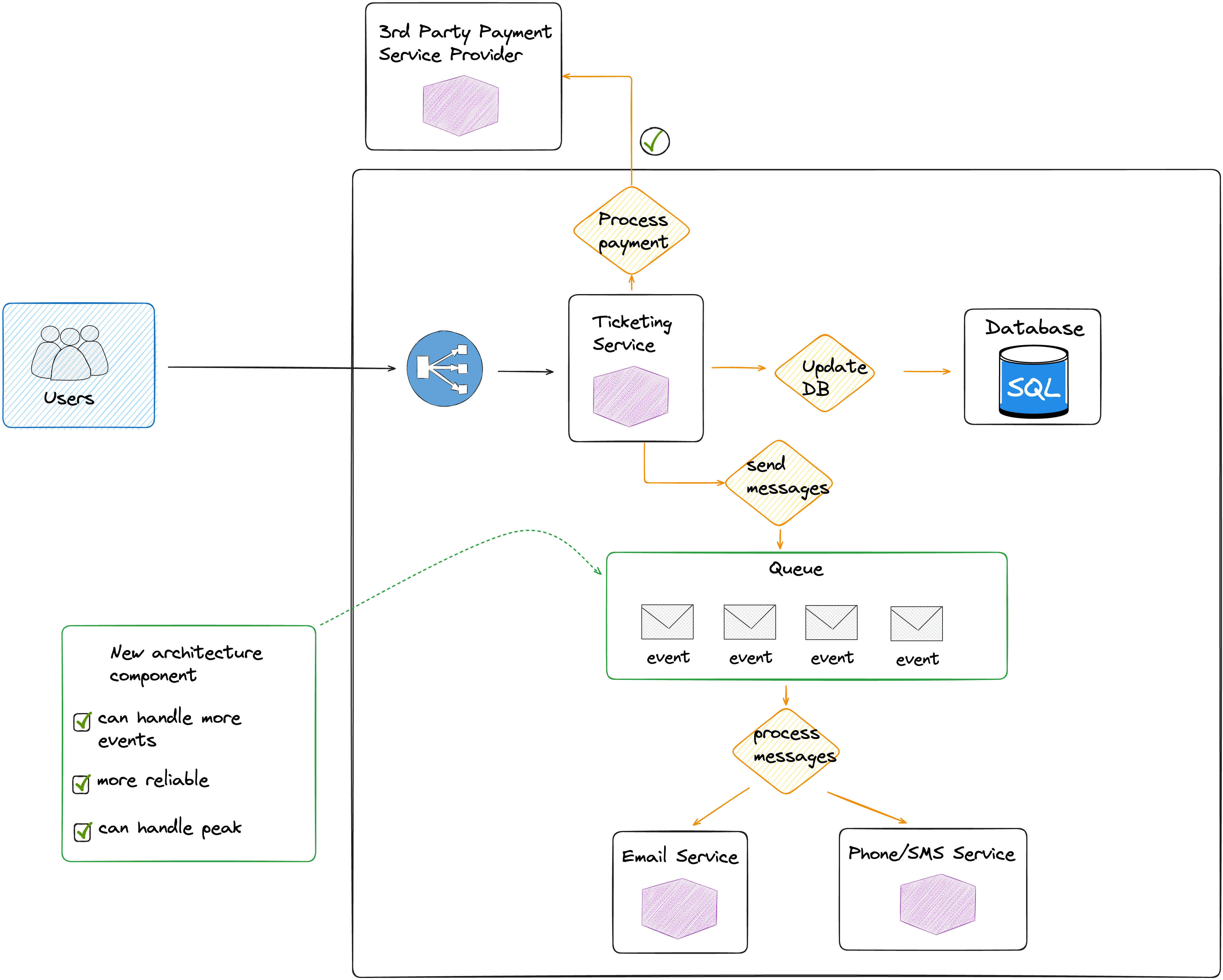

}The new architecture should look like this :

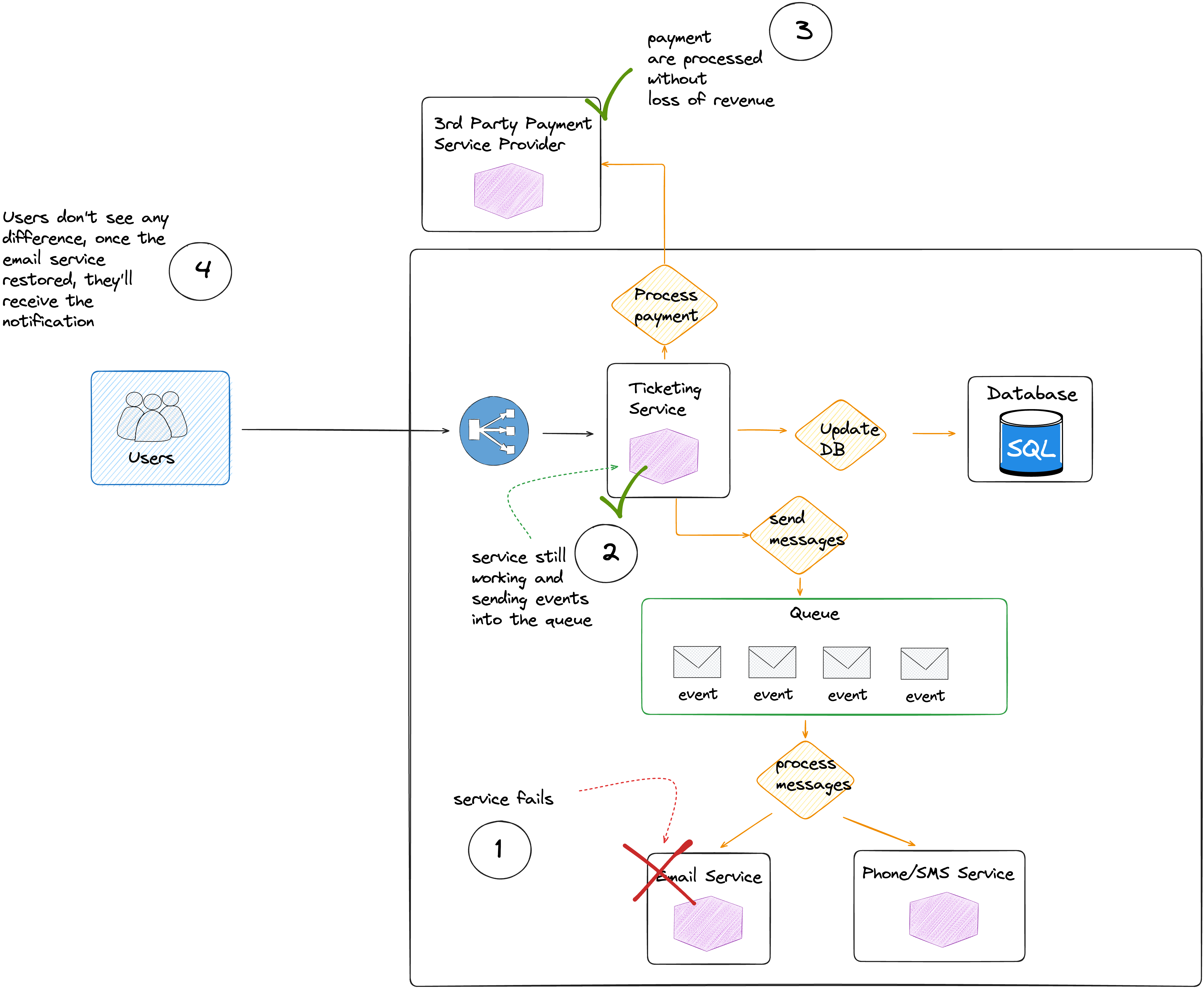

The new "queue system" component should let the architecture handle more events and a higher load.

The email and phone/SMS services can process the events/messages at their own pace.

If one of the services (ticketing service, email service, SMS/phone service) goes down, the messages already published are kept in the queue for later processing.
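On the consumer side, the email service could turn a queued event like the sample above into a confirmation message. The sketch below is only an illustration: it assumes the field names of the sample event, and `build_confirmation_email` and the shape of the returned payload are hypothetical.

```python
import json
from typing import Optional

# Trimmed copy of the sample event, keeping only the fields this sketch reads.
RAW_EVENT = """
{
  "event": "TICKET_PURCHASED",
  "data": {
    "ticket_id": "TCKT-98423",
    "event_name": "Jazz World Tour 2025 - Marseille",
    "user": {"name": "John Doe", "email": "john.doe@minimalistsa.com"},
    "seat": {"section": "A", "row": "5", "seat_number": "42"},
    "payment": {"status": "SUCCESS"}
  }
}
"""


def build_confirmation_email(raw_message: str) -> Optional[dict]:
    """Build an email payload from a queued event.

    Returns None when the event is not a successfully paid ticket purchase.
    """
    message = json.loads(raw_message)
    if message.get("event") != "TICKET_PURCHASED":
        return None
    data = message["data"]
    if data["payment"]["status"] != "SUCCESS":
        return None
    seat = data["seat"]
    return {
        "to": data["user"]["email"],
        "subject": f"Your ticket for {data['event_name']}",
        "body": (
            f"Hello {data['user']['name']}, ticket {data['ticket_id']} is confirmed: "
            f"section {seat['section']}, row {seat['row']}, seat {seat['seat_number']}."
        ),
    }


if __name__ == "__main__":
    print(build_confirmation_email(RAW_EVENT))
```

Because the event carries everything the email needs, the email service never has to call the ticketing service back, which keeps the two decoupled.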

What are the core features of a messaging queue system?

Messaging queue services usually offer the following core features:

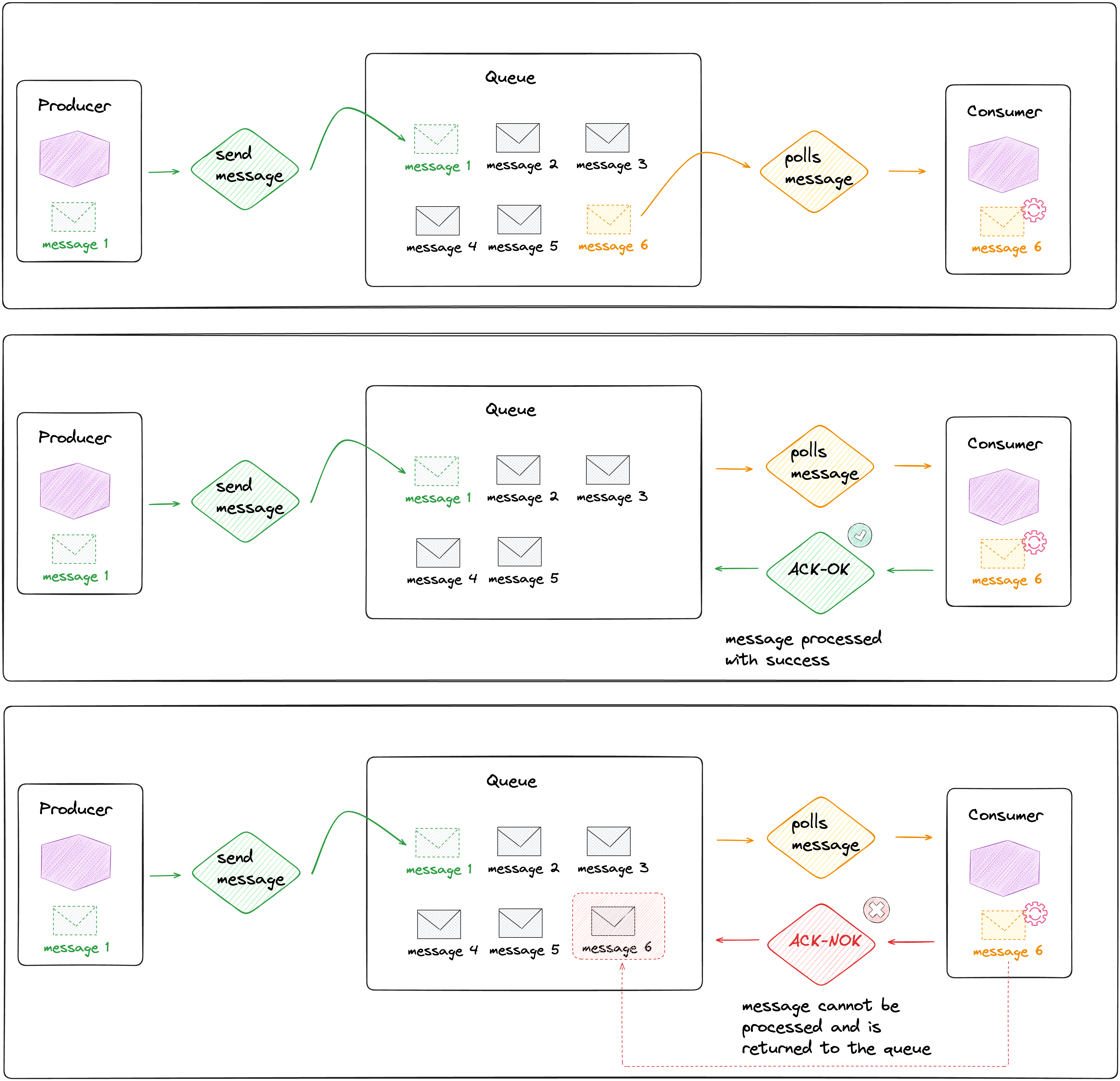

- Message Enqueueing / Dequeueing

- Acknowledgements (ACK/NACK)

- Retries & Backoff

- Dead Letter Queue (DLQ)

- Priority Queues

- Message Ordering

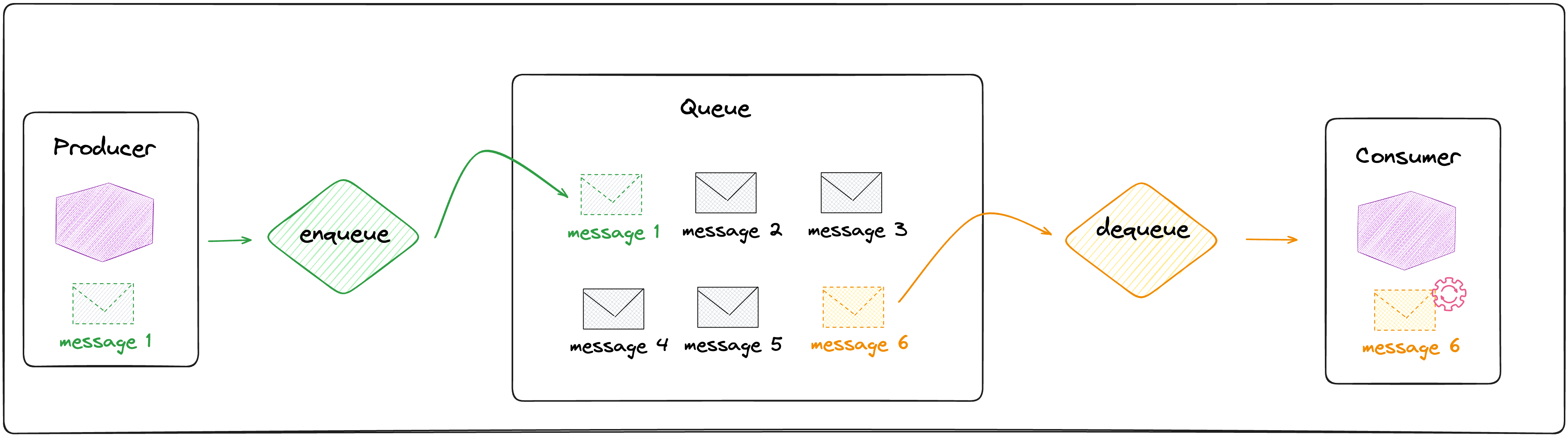

Message Enqueueing / Dequeueing

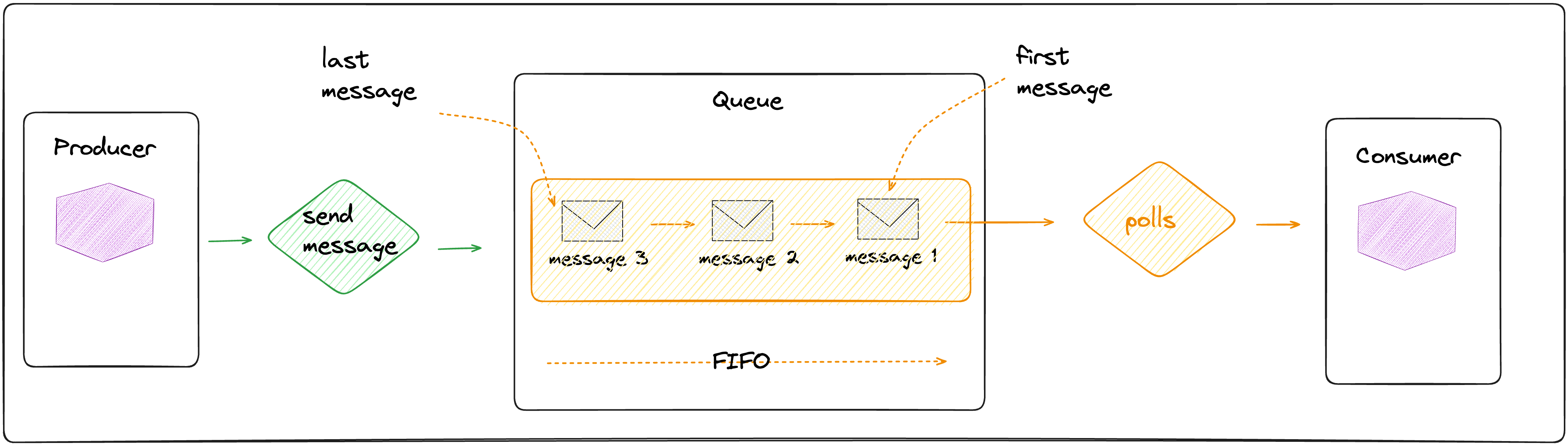

This is the basic functionality of a messaging queue system: the producer (sender) pushes an event onto the queue, then the consumer (receiver) polls the event from the queue. Depending on the queue, messages are delivered either with best-effort ordering or in strict FIFO (first in, first out) order.

Acknowledgements (ACK/NACK)

- ACK: the consumer has processed the message successfully, so the queue can remove it.

- NACK: the consumer could not process the message, so it is returned to the queue.

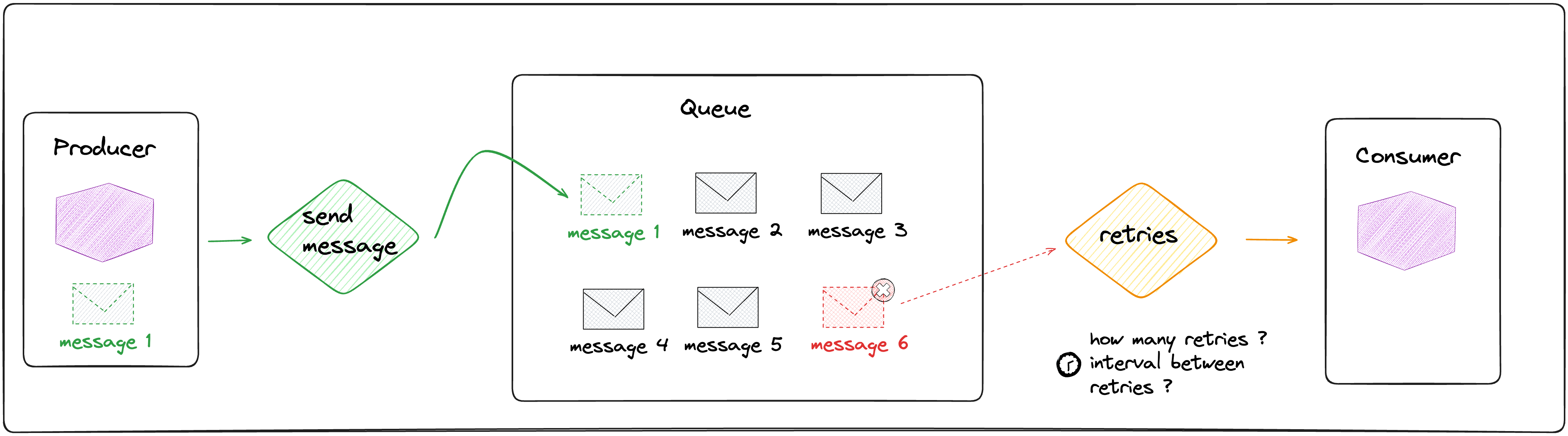
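The ACK/NACK cycle can be sketched with a toy in-memory queue. This is an assumption-laden illustration, not how any particular broker implements it: the `AckQueue` class and its method names are hypothetical, and a message is simply tracked as "in flight" between delivery and acknowledgement.

```python
from collections import deque


class AckQueue:
    """Toy queue: a delivered message stays in flight until ACKed or NACKed."""

    def __init__(self):
        self._ready = deque()   # messages waiting for delivery
        self._in_flight = {}    # message_id -> body, delivered but not yet settled
        self._next_id = 0

    def publish(self, body):
        self._ready.append((self._next_id, body))
        self._next_id += 1

    def receive(self):
        """Hand the oldest ready message to a consumer, or None if none is ready."""
        if not self._ready:
            return None
        message_id, body = self._ready.popleft()
        self._in_flight[message_id] = body
        return message_id, body

    def ack(self, message_id):
        """Processing succeeded: the queue forgets the message."""
        del self._in_flight[message_id]

    def nack(self, message_id):
        """Processing failed: the message goes back to the queue for redelivery."""
        self._ready.append((message_id, self._in_flight.pop(message_id)))

    def pending(self):
        """Number of messages not yet acknowledged."""
        return len(self._ready) + len(self._in_flight)


if __name__ == "__main__":
    q = AckQueue()
    q.publish("email for TCKT-1")
    q.publish("email for TCKT-2")
    first_id, _ = q.receive()
    q.ack(first_id)            # processed successfully
    second_id, _ = q.receive()
    q.nack(second_id)          # failed, returned to the queue
    print(q.pending(), q.receive())
```

Only an ACK removes a message for good, so a consumer that crashes or rejects a message does not cause it to be lost.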

Retries

Failed messages can be redelivered to the consumer and processed again. You can define a retry limit and the interval between retries.

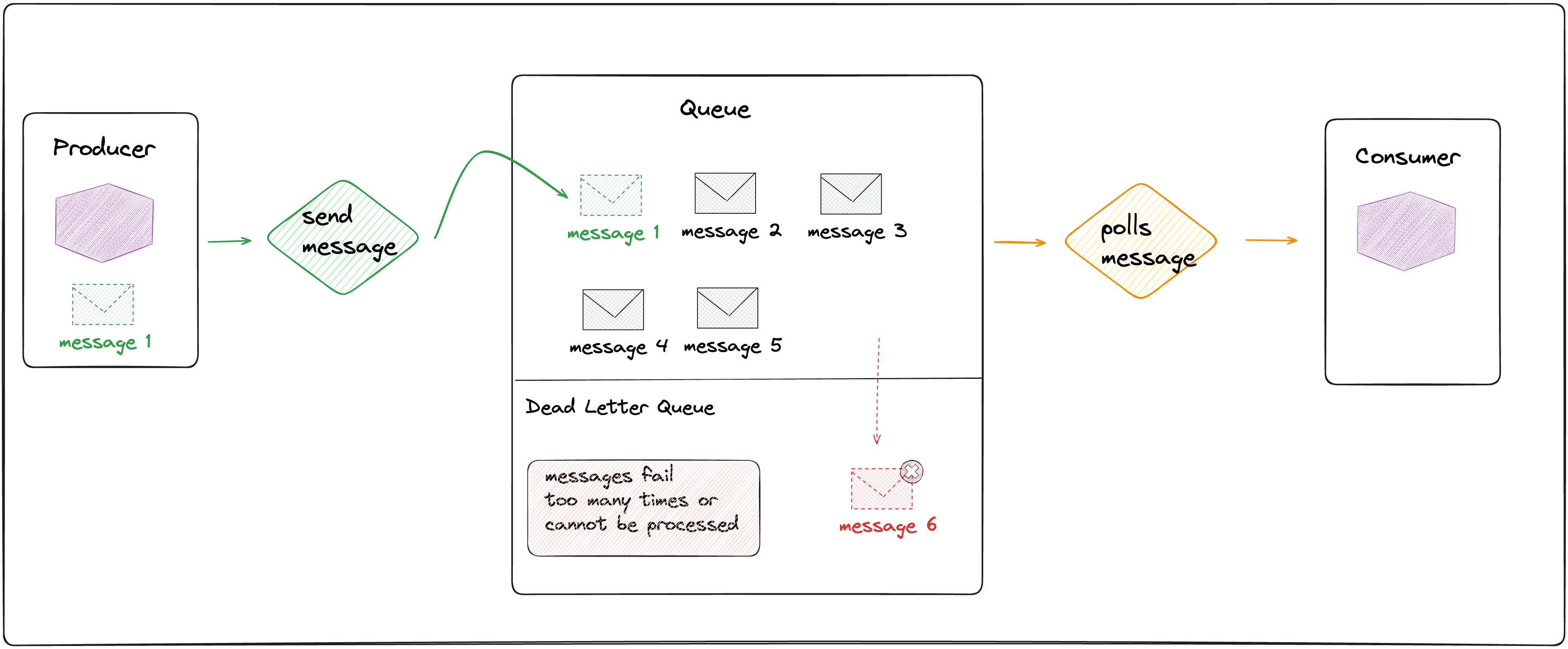
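A retry limit and a retry interval can be combined as follows. This sketch assumes exponential backoff (the delay doubles after each failure), which is one common choice rather than something the text prescribes; `process_with_retries` and its parameters are hypothetical names, and the `sleep` argument is injectable only so the example can be exercised without waiting.

```python
import time


def process_with_retries(handler, message, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call handler(message), retrying on failure with exponential backoff.

    Waits base_delay * 2 ** (attempt - 1) seconds after each failed attempt.
    Returns the number of attempts used, or re-raises the last error once
    max_attempts is exhausted.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            handler(message)
            return attempt
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))


if __name__ == "__main__":
    failures_left = [2]  # the handler fails twice, then succeeds

    def flaky_email_sender(message):
        if failures_left[0] > 0:
            failures_left[0] -= 1
            raise ConnectionError("email provider unavailable")

    attempts = process_with_retries(flaky_email_sender, "TCKT-98423", base_delay=0.01)
    print(f"delivered after {attempts} attempts")
```

Spacing retries out gives a struggling downstream service time to recover instead of hammering it with immediate redeliveries.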

Dead Letter Queue

Messages that have failed too many times or cannot be processed are moved to the DLQ (Dead Letter Queue). This reduces the number of messages left to process in the main queue.

They can then be investigated further or reprocessed manually.

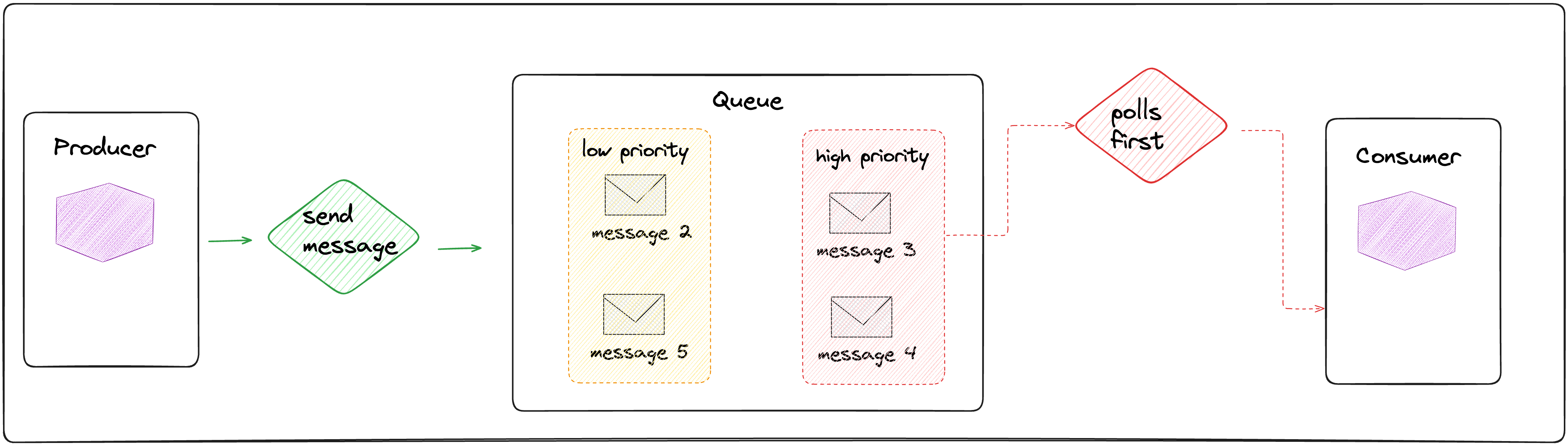

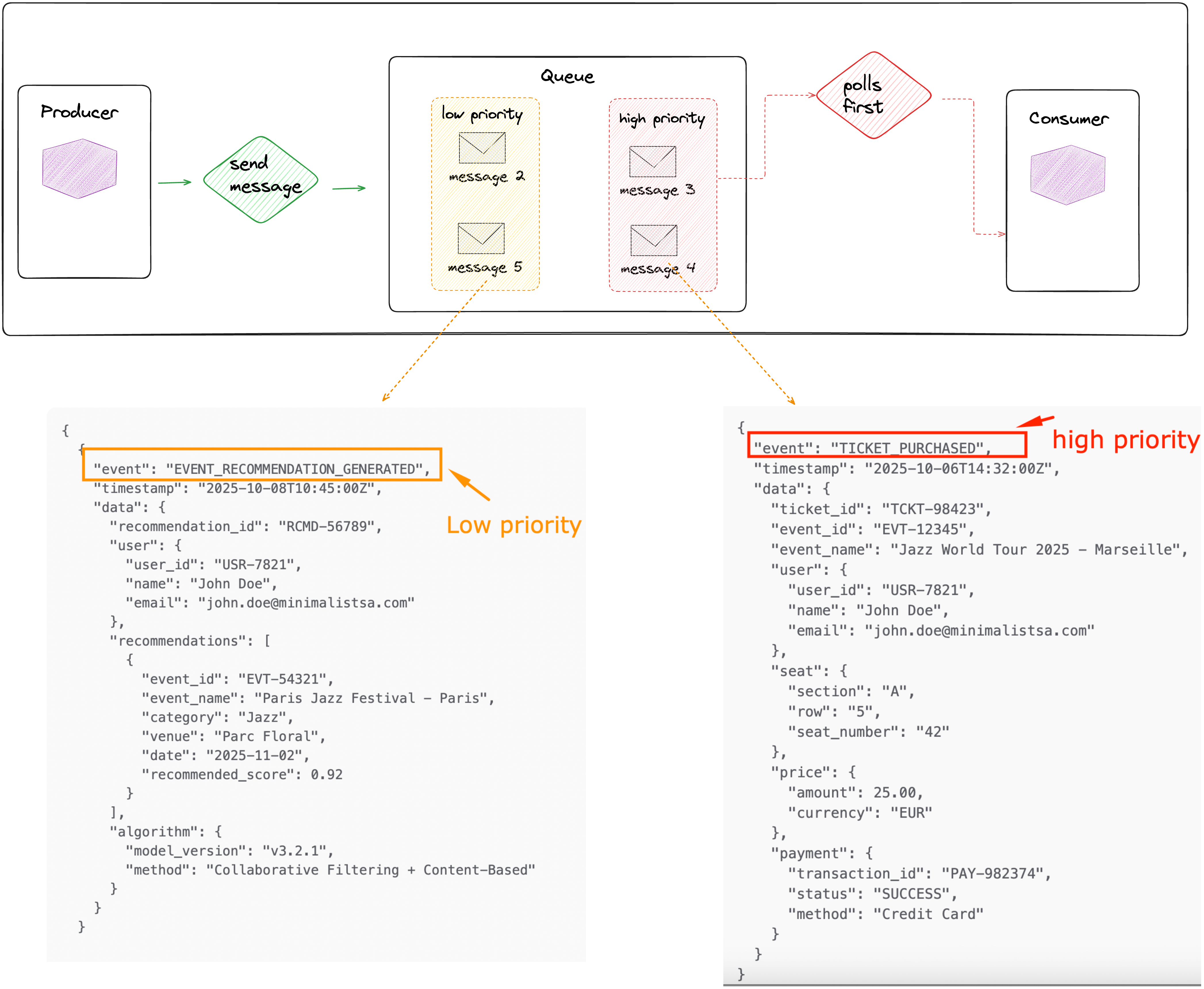
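The hand-off to a dead letter queue can be sketched like this. It is a minimal illustration under stated assumptions: the delivery limit `MAX_DELIVERIES`, the `drain` function, and the message dictionary shape (`body`, `deliveries`, `last_error`) are all hypothetical.

```python
from collections import deque

MAX_DELIVERIES = 3  # assumed limit before a message is dead-lettered


def drain(main_queue, dead_letter_queue, handler):
    """Process main_queue until it is empty.

    A message whose handler keeps failing is requeued until it has been
    delivered MAX_DELIVERIES times, then moved to dead_letter_queue.
    Returns the bodies that were processed successfully.
    """
    processed = []
    while main_queue:
        message = main_queue.popleft()
        try:
            handler(message["body"])
            processed.append(message["body"])
        except Exception as error:
            message["deliveries"] += 1
            message["last_error"] = str(error)  # kept for later investigation
            if message["deliveries"] >= MAX_DELIVERIES:
                dead_letter_queue.append(message)
            else:
                main_queue.append(message)
    return processed


if __name__ == "__main__":
    def send_sms(body):
        if body == "malformed":
            raise ValueError("cannot parse payload")

    main = deque({"body": b, "deliveries": 0} for b in ["sms-1", "malformed", "sms-2"])
    dlq = deque()
    print(drain(main, dlq, send_sms))
    print(list(dlq))
```

The poison message stops circulating after its last allowed delivery, and the recorded error gives whoever inspects the DLQ a starting point.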

Priority Queue

A priority can be assigned to each message so that higher-priority messages are processed before lower-priority ones.

During peak workload, you probably want to process critical events (orders, payments) first and leave lower-priority events (product recommendations, etc.) for later.
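A priority queue can be sketched with the standard library `heapq` module. The convention that a lower number means a higher priority, the class name `PriorityMessageQueue`, and the sample message bodies are assumptions made for this example.

```python
import heapq
import itertools


class PriorityMessageQueue:
    """Toy priority queue: lower priority number is served first."""

    def __init__(self):
        self._heap = []
        # Tie-breaker so messages with the same priority keep arrival order.
        self._counter = itertools.count()

    def publish(self, priority, body):
        heapq.heappush(self._heap, (priority, next(self._counter), body))

    def receive(self):
        """Return the most urgent message body, or None if the queue is empty."""
        if not self._heap:
            return None
        _priority, _order, body = heapq.heappop(self._heap)
        return body


if __name__ == "__main__":
    q = PriorityMessageQueue()
    q.publish(2, "product recommendation")
    q.publish(0, "payment A")
    q.publish(1, "order update")
    q.publish(0, "payment B")
    while (body := q.receive()) is not None:
        print(body)
```

In this run the two payments come out first, in the order they were published, followed by the order update and finally the recommendation.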

Message Ordering

This guarantees that messages are processed in the order they arrive (FIFO: First In, First Out).

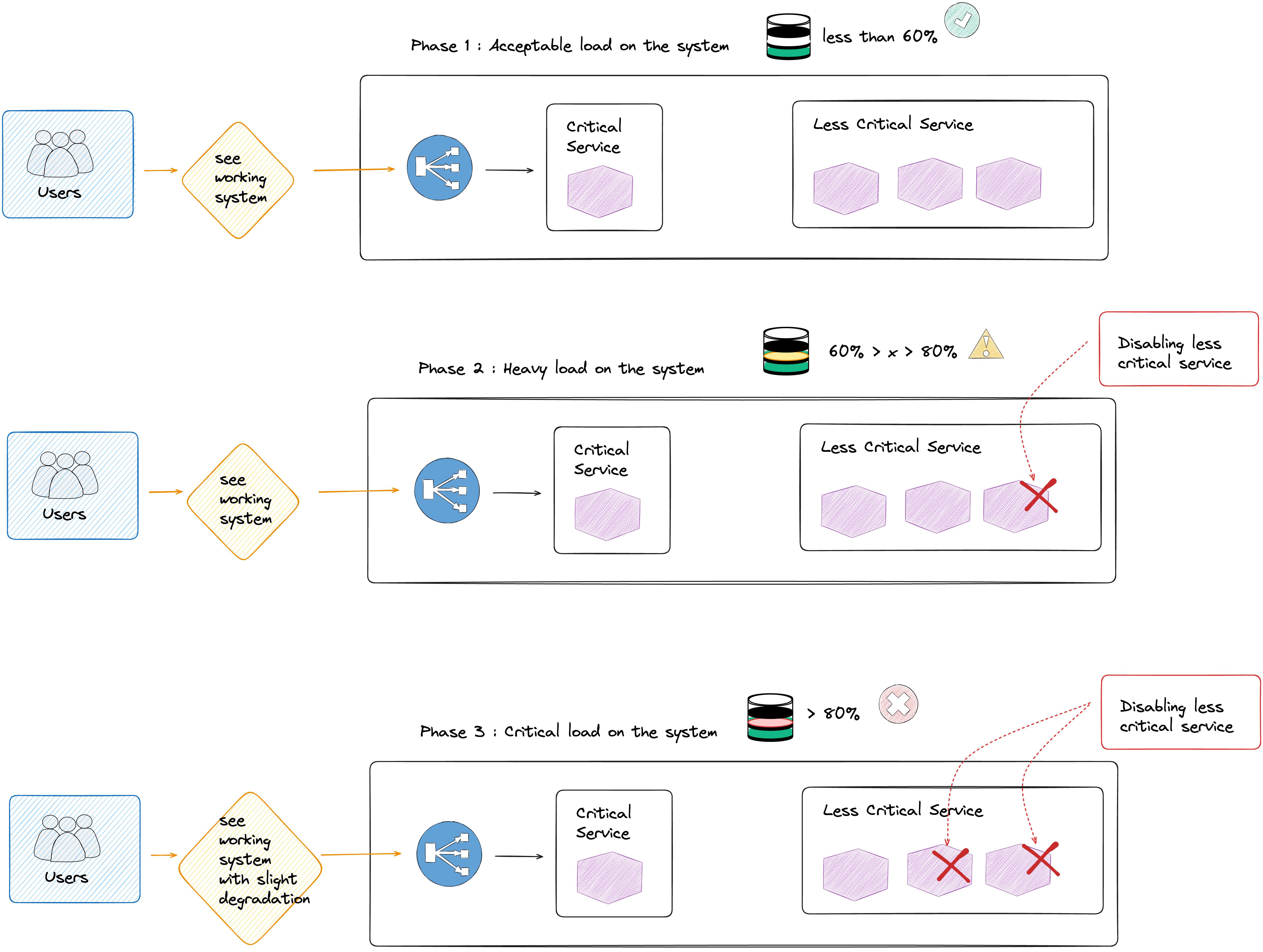

What about graceful degradation?

Capacity planning is critical, but sometimes load exceeds what was anticipated and things break, causing cascading failures.

One way to reduce the risk is to implement graceful degradation. The goal is to progressively reject requests as the load increases, or to temporarily disable minor services or features that are not essential to the user experience. What the user sees is a working system (possibly slightly degraded), not a full crash.
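Both ideas, progressive rejection and switching off non-essential features, can be sketched as follows. This is one possible policy among many: the admission rule (accept just enough requests to stay within capacity), the 0.8 utilization cutoff, and the feature names are assumptions for illustration, and `capacity_rps` is assumed to be greater than zero.

```python
import random

ESSENTIAL_FEATURES = {"ticket_search", "ticket_purchase", "payment"}
OPTIONAL_FEATURES = {"recommendations", "seat_map_preview"}


def admission_probability(offered_rps, capacity_rps):
    """Share of incoming requests to accept so accepted load stays within capacity."""
    if offered_rps <= capacity_rps:
        return 1.0
    return capacity_rps / offered_rps


def should_accept(offered_rps, capacity_rps, rng=random.random):
    """Randomly shed the excess: accept a request with the admission probability."""
    return rng() < admission_probability(offered_rps, capacity_rps)


def enabled_features(offered_rps, capacity_rps, optional_cutoff=0.8):
    """Keep essential features on; switch optional ones off above the cutoff."""
    utilization = offered_rps / capacity_rps
    if utilization > optional_cutoff:
        return set(ESSENTIAL_FEATURES)
    return ESSENTIAL_FEATURES | OPTIONAL_FEATURES


if __name__ == "__main__":
    for offered in (500, 900, 2000, 4000):
        share = admission_probability(offered, capacity_rps=1000)
        features = sorted(enabled_features(offered, capacity_rps=1000))
        print(offered, share, features)
```

As the offered load climbs past capacity, a growing share of requests is rejected quickly while purchases and payments keep working for the requests that are admitted.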

How can you monitor the activity of your messaging queue service?

Like any service, the messaging queue service can itself come under heavy load. It's important to monitor how it behaves and to know which metrics help you understand its state and alert you when thresholds are exceeded.

| Monitoring Objectives | Description | Core metrics to collect | Alerts and thresholds |
|---|---|---|---|
| Availability & Health | Is the broker up and reachable? Are producers and consumers connected? | broker_up, active_connections, queue_status (ready/unavailable) | broker_up == 0 (broker down); active_connections == 0 for > 5 min |
| Performance | Is the queue processing messages in a timely manner? Are consumers catching up with the producers? | message_publish_rate, message_ack_rate, consumer_lag (Kafka), message_latency_p95 / p99 | consumer_lag > 1000 messages for > 5 min; p95_latency > 500 ms |
| Resources & Capacity | Is the broker running out of memory or disk space? Are queues growing too large? | memory_used / memory_available, disk_usage, queue_length | memory > 85% for 5 min; disk_usage > 80%; queue_length > 10k |
| Errors & Faults | Are there any failed message deliveries or rejected messages? Are dead-letter queues (DLQs) growing? | message_error_rate, dlq_message_count | error_rate > 1% over 5 min; DLQ count > 100 |
| Security & Access | Monitor unauthorized access, privilege changes, or suspicious traffic. | failed_auth_count, unauthorized_connection_attempts | failed_auth_count > 5/min; suspicious IPs detected |
| Replication & Cluster State | Ensure replicas are in sync and partitions healthy. | partition_under_replicated, leader_election_rate | under_replicated_partitions > 0; leader_election_rate > 1/min |
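Some of the thresholds in the table can be expressed as simple rules and checked against a metrics snapshot. The sketch below is a simplification: it evaluates a single sample and ignores the "for > 5 min" windows, and metric names such as `p95_latency_ms`, `memory_used_ratio`, and `disk_usage_ratio` are adapted for the example rather than taken from any specific monitoring tool.

```python
import operator

# (metric name, comparison, threshold), adapted from the table above.
ALERT_RULES = [
    ("broker_up", operator.eq, 0),
    ("consumer_lag", operator.gt, 1000),
    ("p95_latency_ms", operator.gt, 500),
    ("memory_used_ratio", operator.gt, 0.85),
    ("disk_usage_ratio", operator.gt, 0.80),
    ("queue_length", operator.gt, 10_000),
    ("message_error_rate", operator.gt, 0.01),
    ("dlq_message_count", operator.gt, 100),
]


def firing_alerts(metrics):
    """Return the names of the rules whose threshold is crossed in this snapshot.

    Metrics missing from the snapshot are skipped rather than treated as alerts.
    """
    return [
        name
        for name, compare, threshold in ALERT_RULES
        if name in metrics and compare(metrics[name], threshold)
    ]


if __name__ == "__main__":
    snapshot = {
        "broker_up": 1,
        "consumer_lag": 2500,
        "p95_latency_ms": 120,
        "queue_length": 10_000,
        "dlq_message_count": 101,
    }
    print(firing_alerts(snapshot))
```

In practice these rules would live in the alerting system itself, which also handles the time windows that this sketch leaves out.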

How many requests can we process during peak time?

Capacity planning helps you determine how many messages can be handled during peak time. The following questions should help you plan for peak workloads:

- How many messages per second can be ingested?

- What is the size of each message?

- How long do messages remain in the queue?

- How many consumers can read simultaneously?
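The answers to these questions can be turned into a rough back-of-the-envelope estimate. The sketch below assumes a steady peak rate and a fixed per-consumer throughput; `plan_capacity`, its parameter names, and the sample figures are hypothetical, and the storage figure is an upper bound for the case where nothing is consumed during the retention window.

```python
import math


def plan_capacity(peak_msgs_per_sec, avg_msg_bytes, retention_sec, per_consumer_msgs_per_sec):
    """Rough sizing from peak rate, message size, retention, and consumer speed."""
    ingest_bytes_per_sec = peak_msgs_per_sec * avg_msg_bytes
    return {
        # Bandwidth the broker must absorb at peak.
        "ingest_mb_per_sec": ingest_bytes_per_sec / 1e6,
        # Worst-case backlog if consumers are down for the whole retention window.
        "storage_gb": ingest_bytes_per_sec * retention_sec / 1e9,
        # Consumers needed to keep up with the peak rate.
        "min_consumers": math.ceil(peak_msgs_per_sec / per_consumer_msgs_per_sec),
    }


if __name__ == "__main__":
    # Illustrative figures: 5,000 msg/s of 1,000 bytes, kept for 1 hour,
    # with each consumer handling 400 msg/s.
    print(plan_capacity(5000, 1000, 3600, 400))
```

With these illustrative figures, the estimate comes to 5 MB/s of ingest, up to 18 GB of stored backlog, and at least 13 consumers.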

Here is a brief comparison of some of the available messaging queue systems:

| | Amazon SQS | RabbitMQ | Apache Kafka |
|---|---|---|---|
| Type | Managed distributed queue (AWS) | Traditional message broker | Distributed log streaming platform |
| Throughput | Standard queues: nearly unlimited throughput; FIFO queues: 300 API calls/sec per action by default (up to 3,000 msg/sec with batching) | Typically 20–50k msg/sec per node; depends on hardware | Easily 1M+ msg/sec per cluster, horizontally scalable |

You can also run stress tests against your messaging queue system under different loads.

Real-World Use Cases for Message Queue Systems

| Industry / Context | Use Case / Scenario | Description / Impact | Sources |

|---|---|---|---|

| E-Commerce | Order Processing | High-volume orders handled asynchronously, ensuring smooth checkout and backend processing. | More details |

| Fintech / Payment Systems | Transaction Processing | Payment requests queued for asynchronous processing and retries, ensuring reliability during spikes. | More details |

| Healthcare | Patient Appointments & Records | Patient scheduling and data handling queued asynchronously, preventing double-bookings or delays. | More details |

| Email / Marketing Platforms | Large-scale Email Dispatch | Email sending queued to handle high volume and ensure timely delivery. | More details |

| IoT / Sensor Networks | Device Data Ingestion | Sensor data queued for async processing, ensuring scalability and reliability. | More details |

| Microservices | Inter-Service Communication | Messages queued to decouple services, enabling asynchronous and reliable communication. | More details |