Objectives:

- Learn the difference between vertical and horizontal scaling

Vertical vs Horizontal scaling

The Problem

One of the main problems you encounter with any application running on your cloud infrastructure is how to handle traffic. If you know how many users will send requests to your application on average, and that number does not increase, it is easier to plan how many resources you will need.

On the other hand, if you don't know in advance how traffic will evolve over time, or how many users will send requests to your application, it's important to understand which scaling strategy to implement. There are two main strategies that we will discuss in this section: vertical scaling and horizontal scaling.

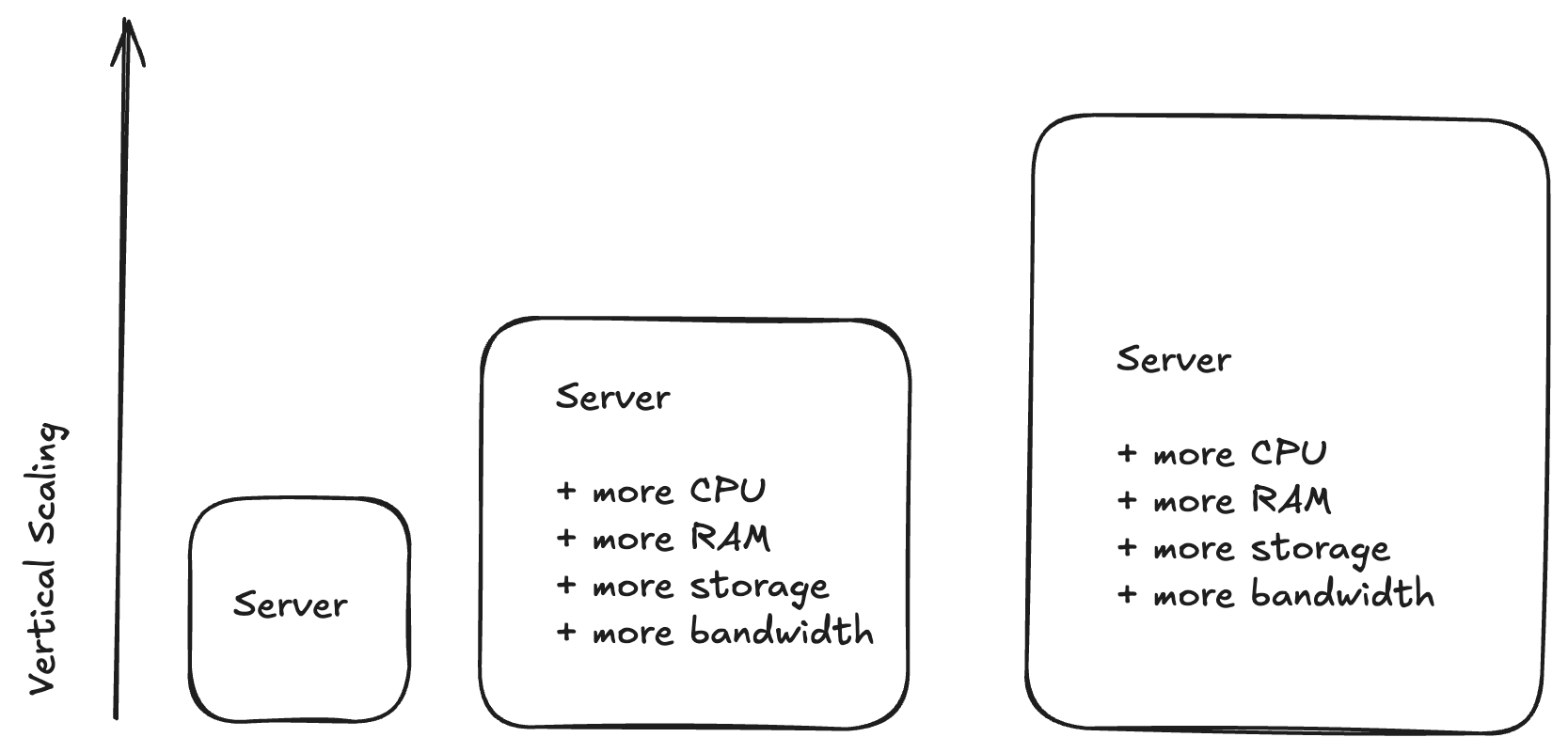

Vertical scaling

In a vertical scaling strategy, you keep the same server but add more capacity (more CPU, more RAM, more network bandwidth, more storage) to improve the performance of your application.

Benefits of using vertical scaling

Increasing the computing power: by adding more hardware resources (memory, CPU, storage or network), you improve the performance of your server and can handle larger workloads. This is especially true for resource-intensive applications.

Easier resource management: you don't have to manage distributed resources. You only manage a single server, which decreases the complexity of managing your infrastructure.

Limits of using vertical scaling

Single point of failure: one of the main limits of vertical scaling is the risk of having a SPOF (Single Point of Failure). Any issue on your server will cause downtime and interrupt your application.

Hardware complexity: upgrading hardware might add an extra layer of complexity, and you usually have to plan maintenance windows more often, which causes service interruptions and limits your application's availability to users.

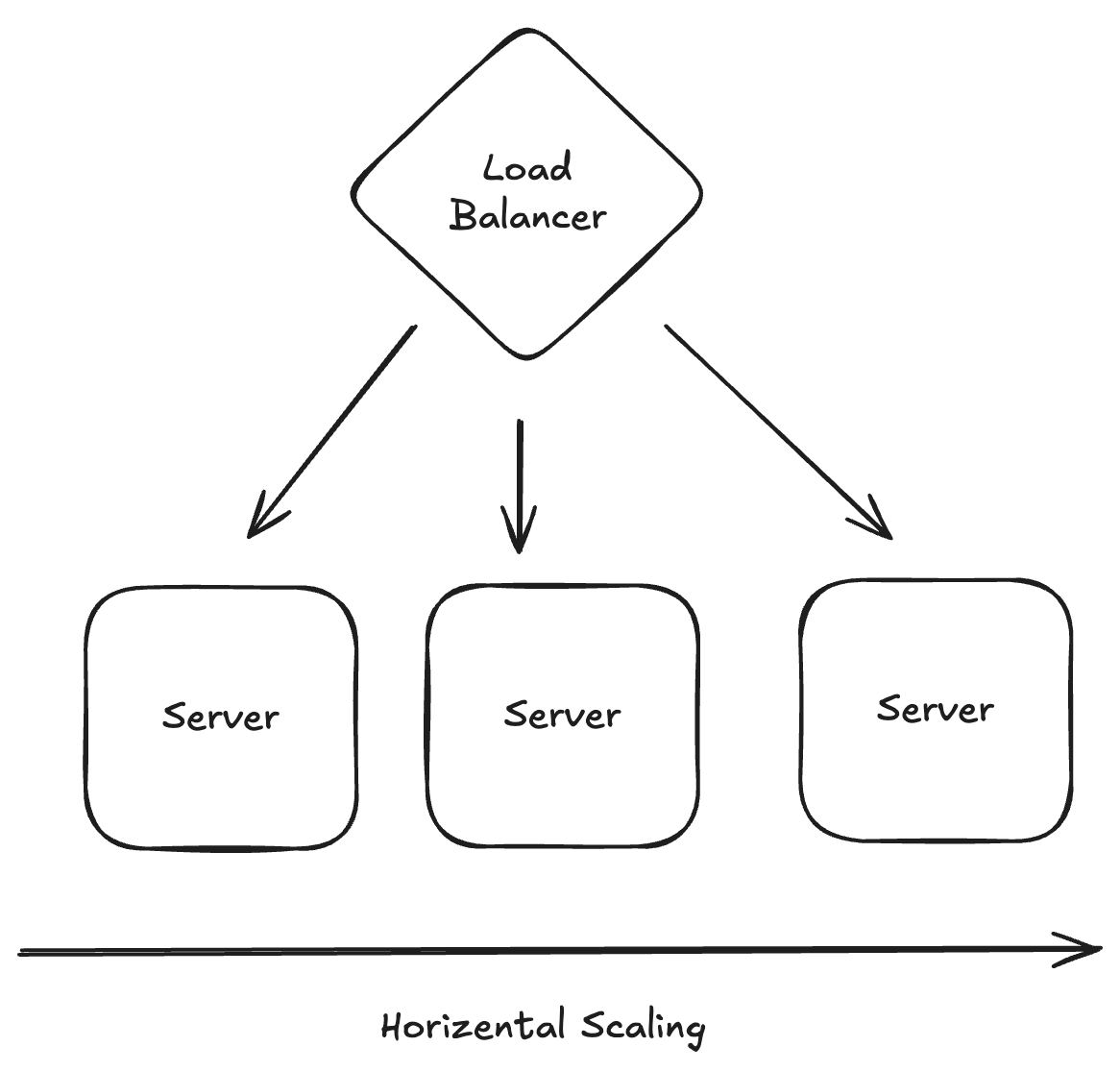

Horizontal scaling

In a horizontal scaling strategy, you add more servers to handle more traffic and more requests. Usually you use a load balancer in front of your servers to distribute the traffic evenly.
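To make "distribute the traffic evenly" concrete, here is a minimal sketch of round-robin distribution, one common way a load balancer can spread requests. The `RoundRobinBalancer` class and the server names are hypothetical and only serve as an illustration. Real load balancers offer other algorithms as well (least connections, weighted distribution, and so on).

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation so each one gets an equal share."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(list(servers))

    def next_server(self):
        # Each call returns the next server in the rotation.
        return next(self._rotation)


# Hypothetical server names, for illustration only.
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])

# Route 9 incoming requests and count how many each server received.
assignments = Counter(balancer.next_server() for _ in range(9))
print(assignments)
```

With three servers and nine requests, each server ends up with three requests. Adding a fourth server to the list is all it takes for the rotation to include it, which is the essence of scaling horizontally.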

Benefits of using horizontal scaling

Improving load distribution: distributing the workload across multiple servers improves performance and makes your application more reliable and resilient. Traffic distribution is controlled by a load balancer, which helps ensure efficient resource utilization.

On-demand workload: one of the key benefits is the ability to handle workloads efficiently. You use only the resources that you need at a given time, which helps lower your resource consumption bills.

Fault tolerance: handling your workload across multiple servers improves resiliency and makes your application more reliable when a failure or incident happens. Traffic is simply redirected to the healthy servers.
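The redirection of traffic away from a failed server can be sketched as follows. This is a simplified illustration, not how any particular load balancer is implemented: the `HealthAwareBalancer` class, its `mark_down` and `mark_up` methods, and the server names are all hypothetical. In practice, the load balancer usually detects failures itself through periodic health checks.

```python
class HealthAwareBalancer:
    """Rotates through servers but skips any that are marked unhealthy."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._index = 0

    def mark_down(self, server):
        # Stand-in for a failed health check.
        self._healthy.discard(server)

    def mark_up(self, server):
        # Stand-in for a server that passes health checks again.
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self):
        # Try each server at most once per call, skipping unhealthy ones.
        for _ in range(len(self._servers)):
            candidate = self._servers[self._index]
            self._index = (self._index + 1) % len(self._servers)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy server available")


# Hypothetical server names, for illustration only.
pool = HealthAwareBalancer(["app-1", "app-2", "app-3"])
pool.mark_down("app-2")  # simulate an incident on one server

routed = [pool.next_server() for _ in range(4)]
print(routed)  # app-2 never appears while it is marked down
```

The application stays available as long as at least one server is healthy. With a single vertically scaled server, the same incident would take the whole application down.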

Limits of using horizontal scaling

Configuration complexity: horizontal scaling depends essentially on a load balancer, so you take on the extra complexity of configuring that load balancer to handle traffic and requests appropriately.

Data consistency: one of the main complexities is keeping data consistent across multiple servers. It adds another layer that can be challenging to manage, because you have to ensure that the data is synchronized between servers. Depending on your workload, you may have to prioritize between "consistency" and "availability". In the next section, we will introduce the CAP theorem.
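To see why synchronization matters, consider this toy sketch of two servers that each hold a copy of the data. It assumes writes land on the first server and reach the other only when a synchronization step runs. The `ReplicatedStore` class, its methods, and the `cart:42` key are hypothetical. Real systems rely on database replication mechanisms rather than anything this simple.

```python
class Replica:
    """One server's local copy of the data."""

    def __init__(self, name):
        self.name = name
        self.data = {}


class ReplicatedStore:
    """Writes go to the first replica; the others catch up when sync() runs."""

    def __init__(self, names):
        self.replicas = [Replica(name) for name in names]
        self._pending = []  # writes not yet copied to the other replicas

    def write(self, key, value):
        self.replicas[0].data[key] = value
        self._pending.append((key, value))

    def read(self, key, replica_index):
        # Reads are served from whichever replica the request lands on.
        return self.replicas[replica_index].data.get(key)

    def sync(self):
        # Copy every pending write to the replicas that have not seen it.
        for key, value in self._pending:
            for replica in self.replicas[1:]:
                replica.data[key] = value
        self._pending.clear()


# Hypothetical server names and key, for illustration only.
store = ReplicatedStore(["app-1", "app-2"])
store.write("cart:42", ["book"])

stale = store.read("cart:42", replica_index=1)  # read before synchronization
store.sync()
fresh = store.read("cart:42", replica_index=1)  # read after synchronization
print(stale, fresh)
```

Before `sync()` runs, the second server answers without the new data, so a user whose requests alternate between servers may see inconsistent results. Waiting for synchronization before answering would fix that at the cost of availability, which is the trade-off mentioned above.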