Objectives:

- Learn how to distribute workloads with load balancers

Workload Distribution

The Problem

Most modern web applications are designed to handle anywhere from a few thousand to hundreds of thousands, or even millions, of requests. The challenge is often to scale efficiently to match incoming traffic while distributing the workload evenly across your servers. Load balancers are usually the answer: they are the key component for handling these requests.

Load balancers offer several ways to route traffic:

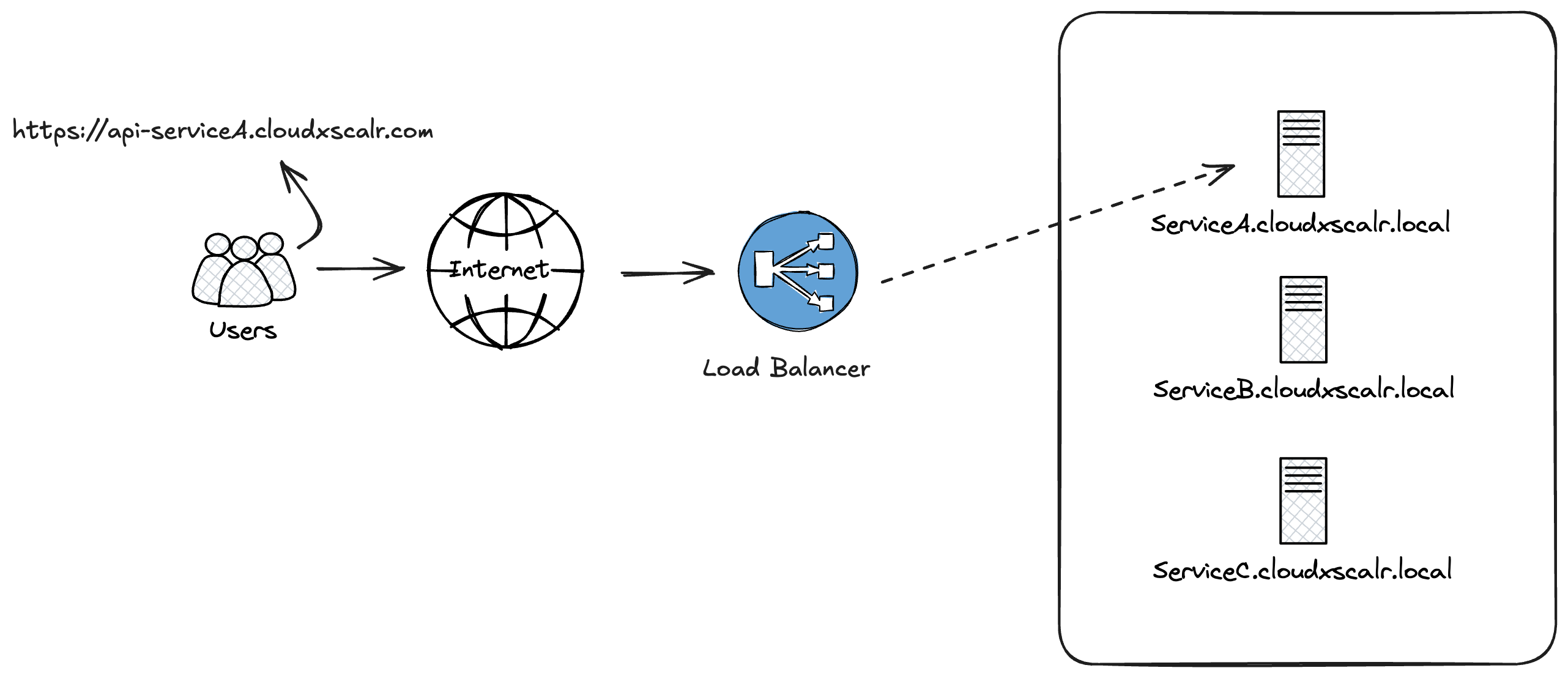

Host-based routing

Traffic is routed based on the requested hostname. In the following example, users request the URL https://api-serviceA.cloudxscalr.com; when the request reaches the load balancer, it is forwarded to the server or service "serviceA.cloudxscalr.local".
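The host-based rule above can be sketched as a simple lookup table. This is a minimal illustration, not how any particular load balancer is implemented; the names `HOST_ROUTES` and `route_by_host` are hypothetical, and the single rule is taken from the example in this section.

```python
from typing import Optional

# Hypothetical routing table: public hostname (lowercase) -> internal backend.
HOST_ROUTES = {
    "api-servicea.cloudxscalr.com": "serviceA.cloudxscalr.local",
}


def route_by_host(host_header: str) -> Optional[str]:
    """Return the backend for a Host header value, or None if no rule matches."""
    # Hostnames are case-insensitive, and the header may carry a ":port" suffix.
    # (Assumption: IPv6 literals are not handled in this sketch.)
    hostname = host_header.split(":", 1)[0].strip().lower()
    return HOST_ROUTES.get(hostname)
```

Returning `None` for unknown hosts leaves the fallback decision (a default backend or an error response) to the caller.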

Benefits of using host-based routing

Simple to implement: Each hostname maps directly to one server or service, so the routing rules are straightforward to set up.

Service isolation: Each service can have its own subdomain, which makes it easier for each team to manage its own scope and simplifies development and testing.

Limits of using host-based routing

Domain registration: Each service may have its own subdomain, meaning you have to register a new subdomain (a DNS record) for each new service. Depending on the number of services, this can become overwhelming to manage.

Path-based routing

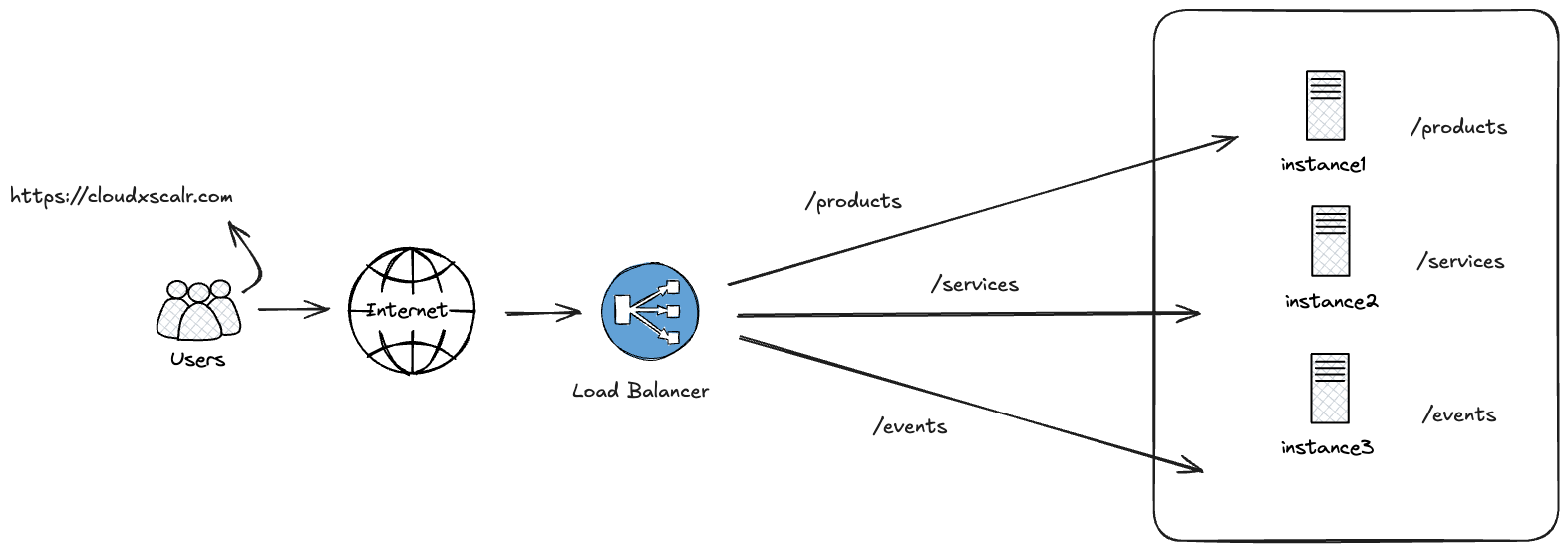

The traffic is routed based on the URL path.

Example:

When a user enters https://cloudxscalr.com/products, the load balancer forwards the traffic to instance1.

When a user enters https://cloudxscalr.com/services, the load balancer forwards the traffic to instance2.
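As a rough sketch of the example above, path-based routing can be modeled as prefix matching on the URL path. The names `PATH_ROUTES` and `route_by_path` are hypothetical, and the longest-prefix-first ordering is an assumption of this sketch; real load balancers define their own rule priorities.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical rules taken from the example: path prefix -> target instance.
PATH_ROUTES = [
    ("/products", "instance1"),
    ("/services", "instance2"),
]


def route_by_path(url: str) -> Optional[str]:
    """Return the target for a URL, or None if no path rule matches."""
    path = urlsplit(url).path
    # Check longer prefixes first so a more specific rule wins over a shorter one.
    for prefix, target in sorted(PATH_ROUTES, key=lambda rule: len(rule[0]), reverse=True):
        # Match the prefix itself or anything below it, but not "/productsX".
        if path == prefix or path.startswith(prefix + "/"):
            return target
    return None
```

Matching on `prefix + "/"` rather than the bare prefix avoids sending an unrelated path such as `/products-archive` to instance1.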

Benefits of using path-based routing

Simple architecture: It makes your architecture easier to manage. Multiple services (or APIs) sit under a single hostname, so you have a single entry point and no additional subdomain names to manage.

Limits of using path-based routing

Change management process: Having a central access point requires a good change management process when upgrading API versions or running development tests.

Cost management: Costs might increase over time if you have heavy traffic.

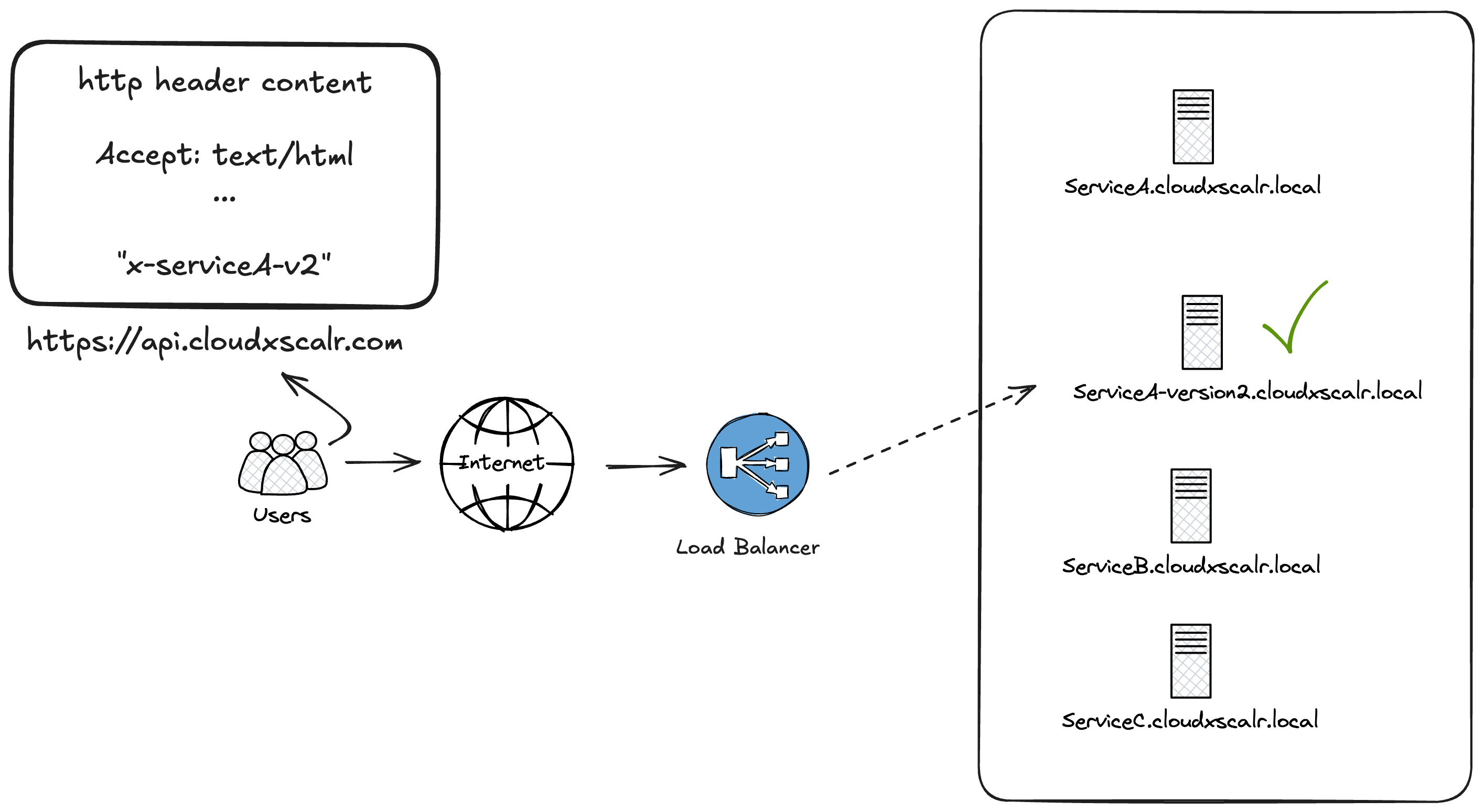

HTTP header routing

The request is routed based on the content of the headers in the HTTP request. In the following example, the HTTP request contains the header "x-serviceA-v2", so it is forwarded to the API/service serviceA-version2.
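The header rule described above could look like the following sketch. It assumes the rule matches on the presence of a header named "x-serviceA-v2" (the text does not say whether the name or the value is matched), and the fallback backend `serviceA` as well as the names `HEADER_ROUTES` and `route_by_header` are hypothetical.

```python
from typing import Mapping

# Hypothetical rule from the example: header name (lowercase) -> backend.
HEADER_ROUTES = {
    "x-servicea-v2": "serviceA-version2",
}

# Assumed fallback when no header rule matches; not named in the text.
DEFAULT_BACKEND = "serviceA"


def route_by_header(headers: Mapping[str, str]) -> str:
    """Pick a backend based on which routing headers the request carries."""
    # HTTP header names are case-insensitive, so compare in lowercase.
    present = {name.lower() for name in headers}
    for header_name, backend in HEADER_ROUTES.items():
        if header_name in present:
            return backend
    return DEFAULT_BACKEND
```

With this shape, a client opts in to the new version simply by adding the header, which is what makes the approach convenient for A/B testing.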

Benefits of using HTTP header routing

Flexible configuration: Routing rules are easy to implement and are useful for A/B testing or versioning.

Limits of using HTTP header routing

Change management process: This approach assumes you manage the client side (the origin of the HTTP request) and can easily update the headers it sends.

Load Balancer core features

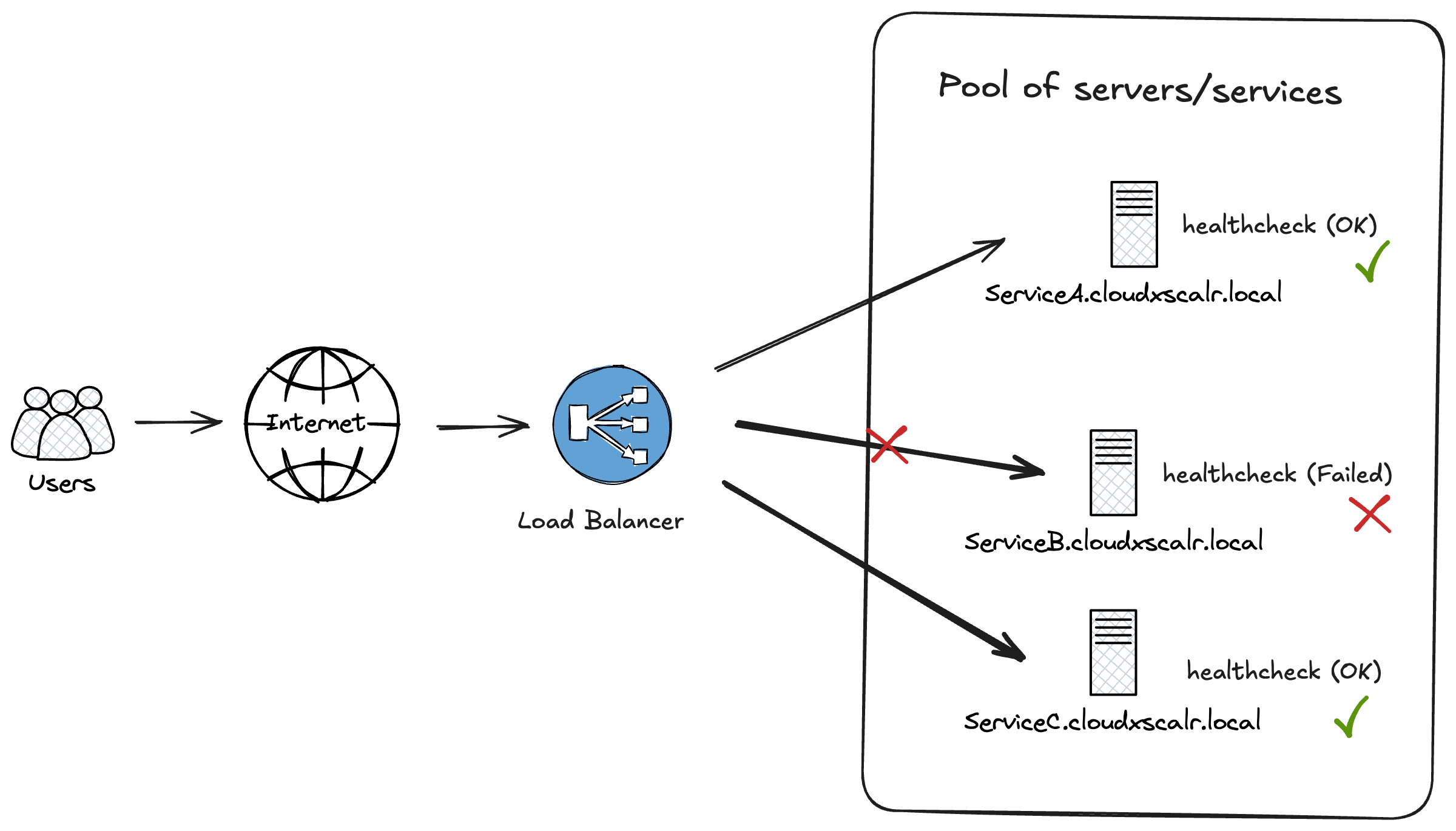

Health checks: This is probably the most important feature of a load balancer. By defining a health check interval, you can monitor whether the servers or services receiving requests are online or offline. If a server or service fails to respond to the health check, the load balancer removes it from the pool of servers that receive requests.
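One pass of the health check described above might be sketched as follows. The function name `run_health_check` and the `probe` callback are hypothetical; in practice the probe would be something like an HTTP request with a timeout, and the pass would be repeated at every health check interval.

```python
from typing import Callable, Iterable, List


def run_health_check(pool: Iterable[str], probe: Callable[[str], bool]) -> List[str]:
    """Run one health check pass and return the servers that should keep receiving requests."""
    healthy = []
    for server in pool:
        try:
            ok = probe(server)
        except Exception:
            # A probe that raises (timeout, connection refused, ...) counts as a failure.
            ok = False
        if ok:
            healthy.append(server)
    return healthy
```

Many load balancers only mark a server unhealthy after several consecutive failures; this sketch removes it after a single failed probe to keep the idea visible.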

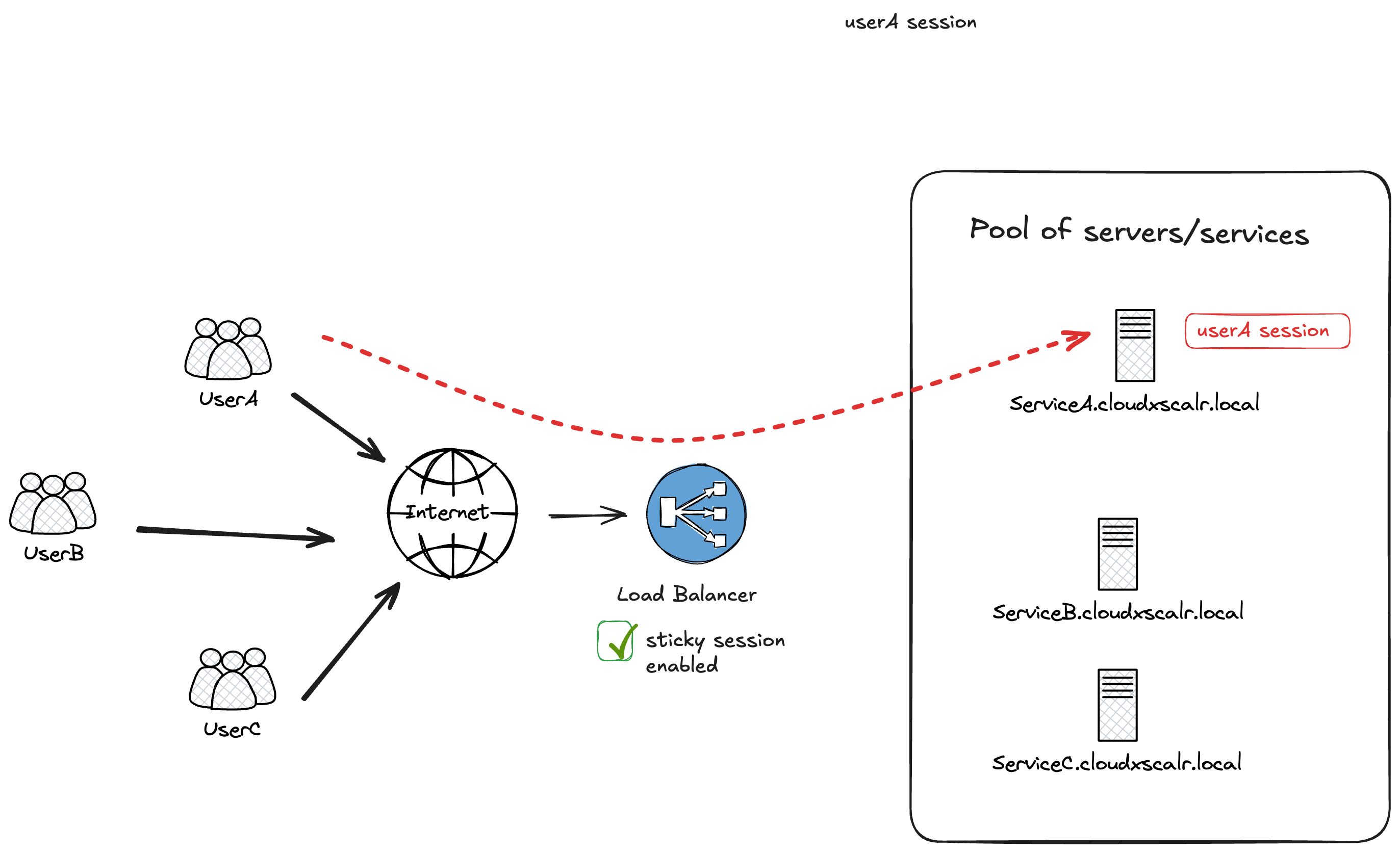

Sticky sessions: Usually, load balancers distribute traffic across different servers, so a new session may be opened on a different server each time. In some use cases, you may want to keep a user's session on a specific server. The "sticky session" option allows you to keep a user session on the same server.
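To make the idea concrete, here is a minimal sketch that combines round-robin distribution with a session table. The class `StickyBalancer` and the `session_id` key are hypothetical; real load balancers typically derive stickiness from a cookie or the client IP address.

```python
import itertools
from typing import Dict, List


class StickyBalancer:
    """Round-robin for new sessions; known sessions stay on their first server."""

    def __init__(self, servers: List[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._round_robin = itertools.cycle(servers)
        self._sessions: Dict[str, str] = {}

    def pick(self, session_id: str) -> str:
        # A new session gets the next server in rotation; the choice is then remembered.
        if session_id not in self._sessions:
            self._sessions[session_id] = next(self._round_robin)
        return self._sessions[session_id]
```

For example, with servers `["instance1", "instance2"]`, the first session lands on instance1, the second on instance2, and any later request from the first session goes back to instance1.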

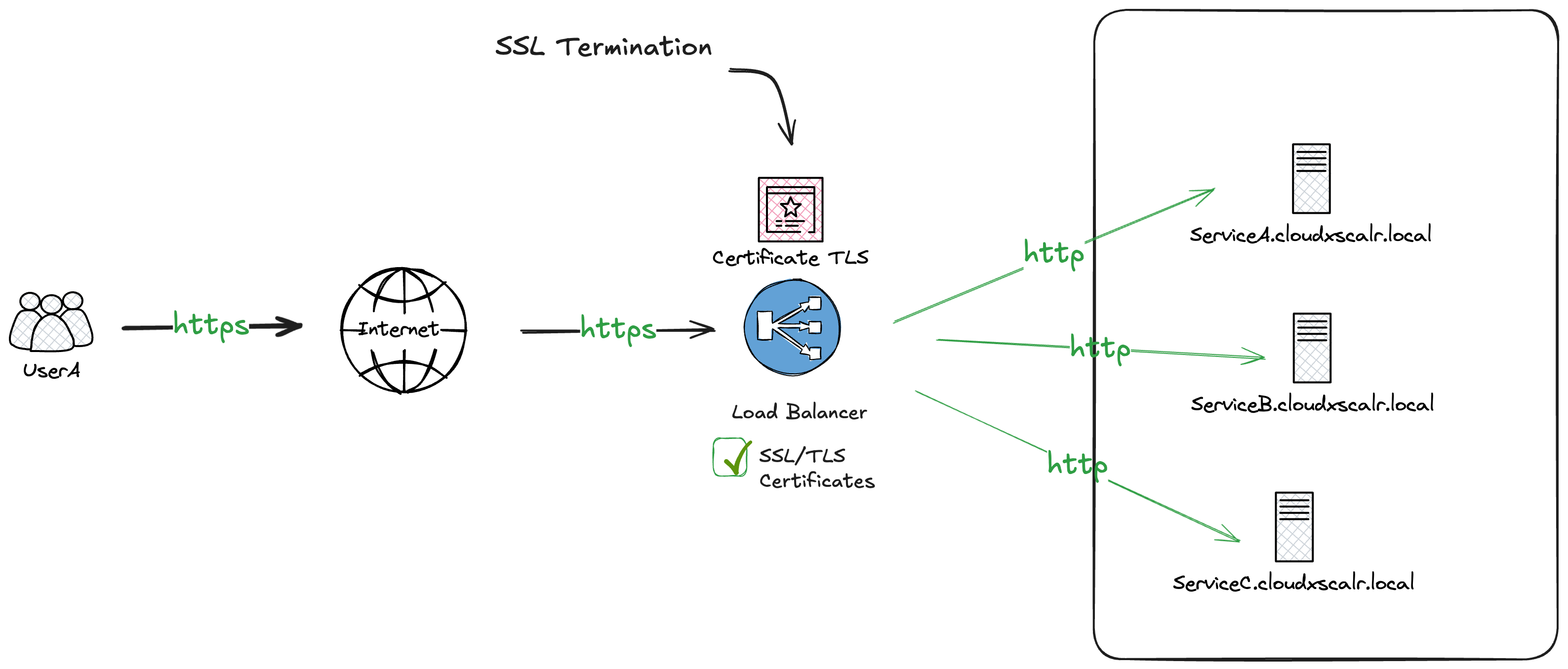

SSL termination: To prevent third parties from accessing the content of your requests, you can usually upload a TLS/SSL certificate to the load balancer. Users can then send secure HTTPS requests to your load balancer. The secure session is terminated on the load balancer, and plain HTTP requests are then sent to the target servers.

Logging: Logging requests is another important aspect. The goal is to keep a trace of every user request for security and audit purposes.

Redirect: You can redirect incoming requests, for example from HTTP (port 80) to HTTPS (port 443), forcing secure traffic from the user/client.
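The HTTP-to-HTTPS redirect can be sketched as a function that computes the redirect response for an incoming URL. The function name `https_redirect` is hypothetical, and the choice of status code 301 (permanent redirect) is an assumption of this sketch; the text does not specify one.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit, urlunsplit


def https_redirect(url: str) -> Optional[Tuple[int, str]]:
    """Return (status, location) for plain HTTP URLs, or None if no redirect is needed."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    # Drop any explicit port (such as :80) so the HTTPS URL uses the default port 443.
    host = parts.hostname or ""
    location = urlunsplit(("https", host, parts.path, parts.query, parts.fragment))
    return 301, location
```

For instance, `https_redirect("http://cloudxscalr.com/products?id=1")` yields status 301 with the location `https://cloudxscalr.com/products?id=1`, while an HTTPS URL is left alone.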