Objectives:

- Learn the fundamentals of caching

Caching

The Problem

You've just deployed your application, and all of its data is persisted in a database.

A few users are on the application, and they are happy with their experience so far.

A few months later, demand grows: more and more users register, and things start to get slow.

Some users complain about their experience.

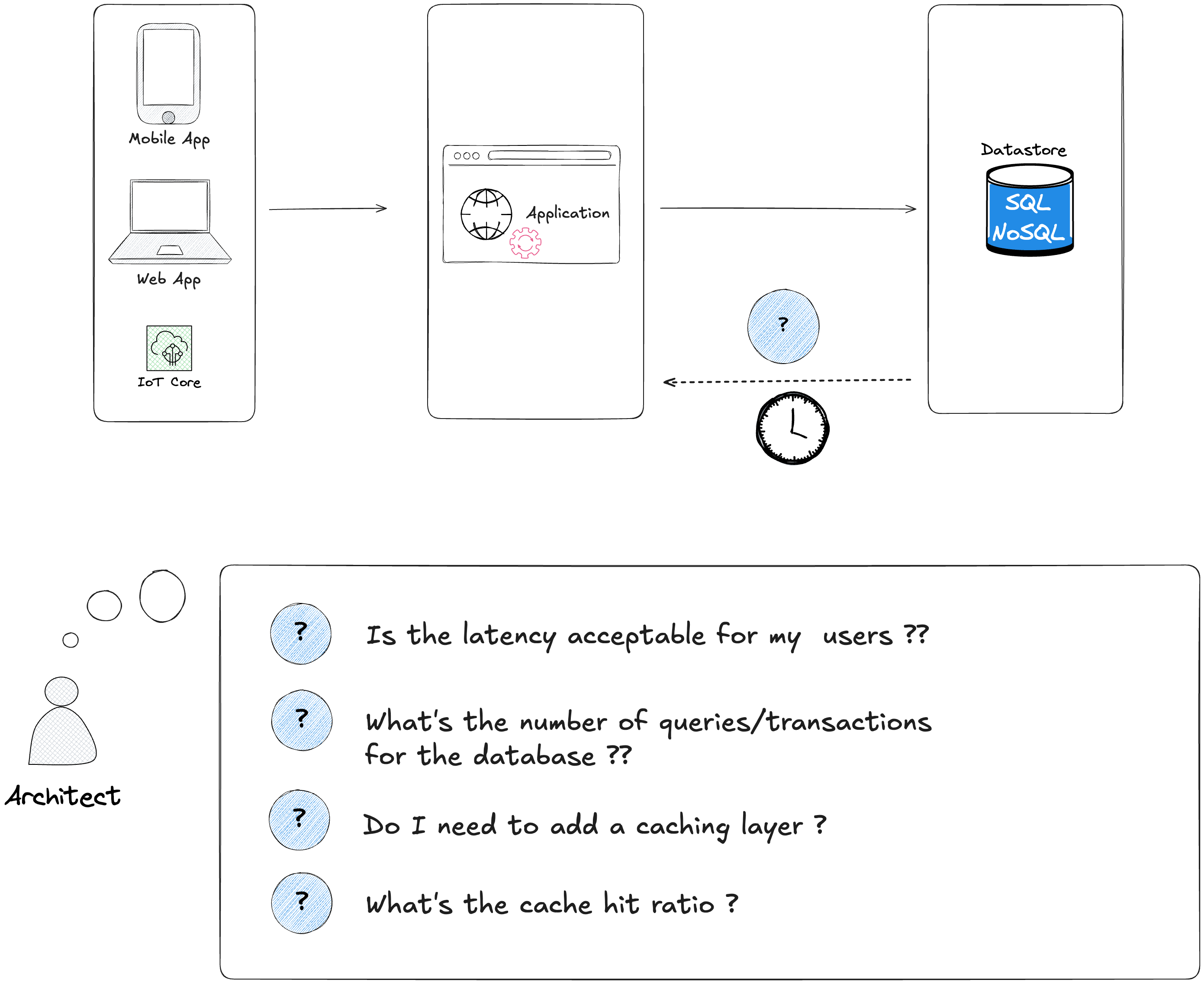

As illustrated in the diagram above, there are a few questions you can ask yourself.

Answering these questions should help you weigh the tradeoffs and decide whether you need a caching layer and, most importantly, whether your users are happy with their experience of your application.

What's a caching system?

During normal operation, your application sends queries (reads and writes) to a database. If the demand or workload grows sharply (more and more users, for example), latency increases.

Your users get slower responses, which significantly degrades their experience. A caching system addresses the problem by adding an extra layer that speeds up responses.

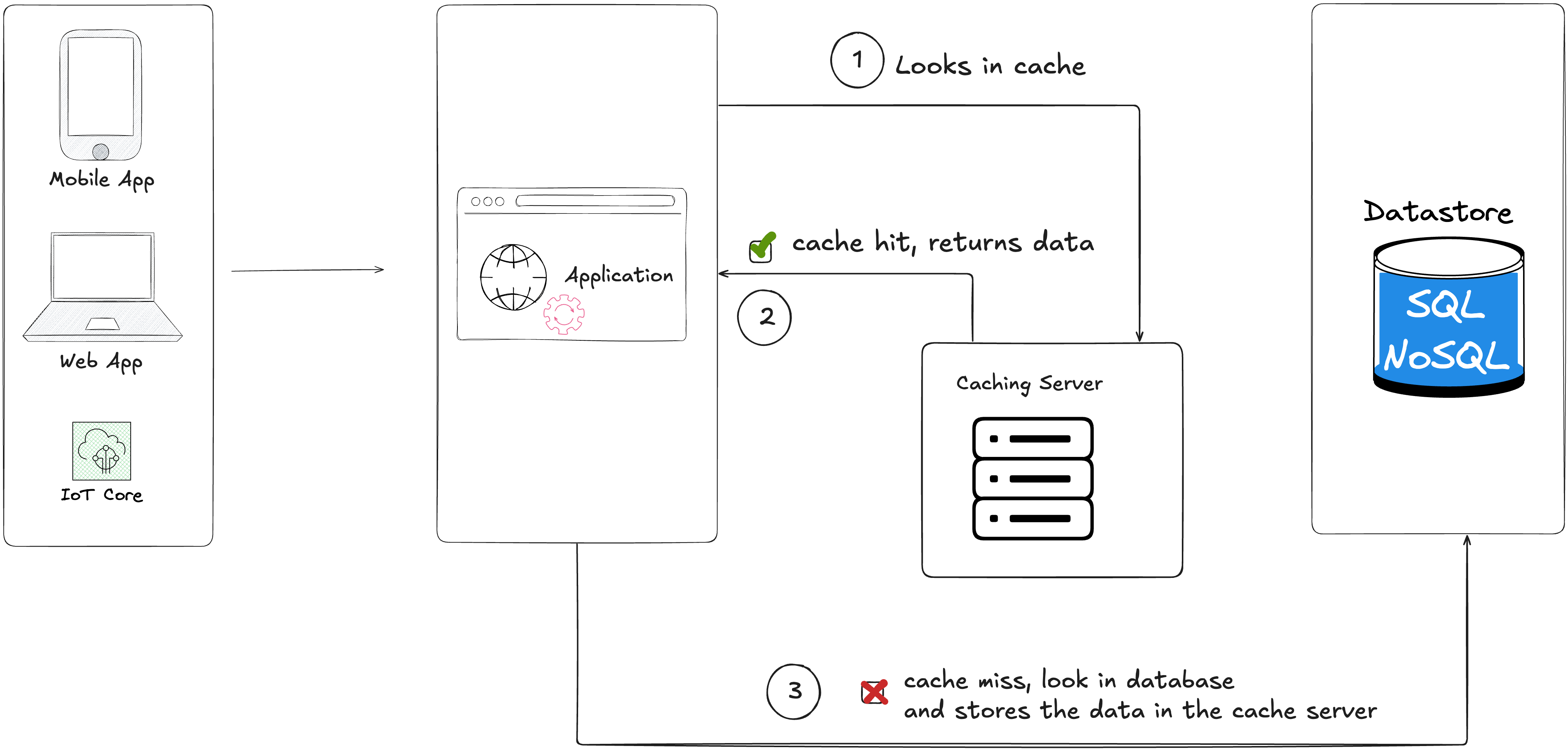

When a caching system sits between your application and a database, the following operations happen:

- The application first checks whether the cache already holds a response for the query.

- If no response is saved in the cache, it's a cache miss: the application sends the request to the database, gets the response, and saves it to the cache.

- If a response is already saved, it's a cache hit, and the application gets a faster response.
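
The read path described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not a production cache: the `CacheAside` class, the `fake_db_lookup` function, and the 60-second TTL are all hypothetical names and values chosen for the example.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CacheAside:
    """Minimal in-memory cache placed in front of a slower data source."""

    def __init__(self, load_from_db: Callable[[str], Any], ttl_seconds: float = 60.0):
        self._load_from_db = load_from_db  # hypothetical database accessor
        self._ttl = ttl_seconds            # assumed expiry, not from the text
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expiry, value)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Any:
        entry = self._store.get(key)
        if entry is not None and entry[0] > time.monotonic():
            self.hits += 1   # cache hit: answer without touching the database
            return entry[1]
        self.misses += 1     # cache miss: query the database, then save the result
        value = self._load_from_db(key)
        self._store[key] = (time.monotonic() + self._ttl, value)
        return value


def fake_db_lookup(key: str) -> str:
    # Stand-in for a real database query.
    return f"row-for-{key}"


cache = CacheAside(fake_db_lookup)
first = cache.get("user:42")   # miss: loaded from the "database"
second = cache.get("user:42")  # hit: served from the cache
print(first, second, cache.hits, cache.misses)
```

The `hits` and `misses` counters are kept only so that the hit ratio can be observed; a real deployment would normally rely on the cache server's own statistics.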

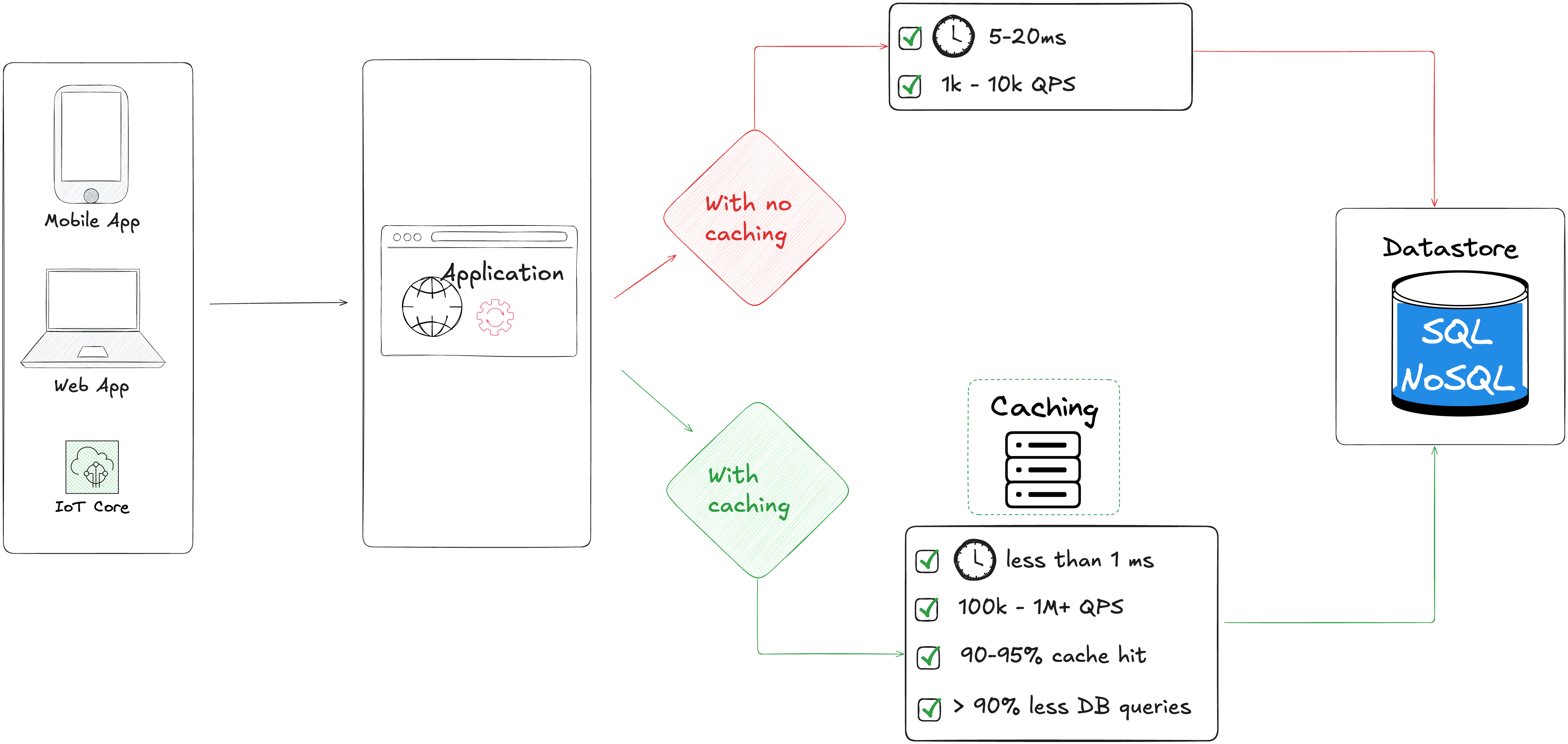

Caching can significantly improve performance. The following diagram shows the difference between an implementation with caching and one without. With caching, you can handle more queries per second (QPS), latency can drop below 1 ms, and the cache hit ratio can reach 95%, which reduces the number of queries sent to the database.

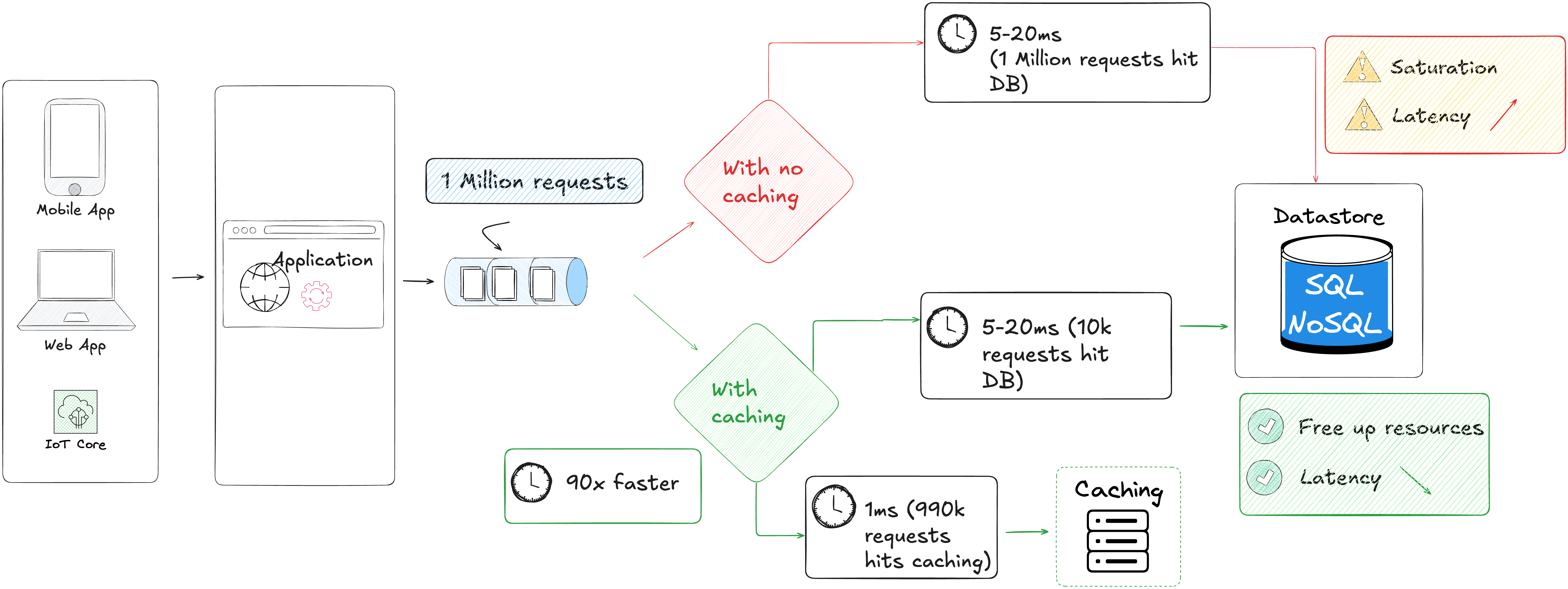

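Here is an example with 1 million requests:

Without caching: 100% of the requests hit the database. If the database latency is 5-20 ms, that is 1 million requests x (5-20 ms), or 5,000 to 20,000 seconds of cumulative database time.

With caching: 1% of the requests hit the database and 99% are served from the cache (with 1 ms latency). The database handles 100x fewer requests, and the average latency drops to about 1.0-1.2 ms, which is roughly 5x to 17x faster than the 5-20 ms database latency.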
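
A quick way to sanity-check figures like these is to compute the expected latency per request. The sketch below assumes a simplified model in which a hit costs only the cache latency and a miss costs only the database latency; the function name `expected_latency_ms` is made up for this example.

```python
def expected_latency_ms(hit_ratio: float, cache_ms: float, db_ms: float) -> float:
    """Average latency per request: hits pay cache_ms, misses pay db_ms."""
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * db_ms


TOTAL_REQUESTS = 1_000_000
HIT_RATIO = 0.99  # 99% of requests served from the cache
CACHE_MS = 1.0    # cache latency from the example

db_requests = round(TOTAL_REQUESTS * (1.0 - HIT_RATIO))
print(f"requests reaching the database: {db_requests}")

for db_ms in (5.0, 20.0):  # bounds of the 5-20 ms database latency range
    cached = expected_latency_ms(HIT_RATIO, CACHE_MS, db_ms)
    speedup = db_ms / cached
    print(f"db={db_ms:.0f} ms -> avg with cache {cached:.2f} ms, speedup {speedup:.1f}x")
```

Under this model, 10,000 of the 1 million requests reach the database, and the average latency is about 1.04 ms when the database takes 5 ms and about 1.19 ms when it takes 20 ms.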

How can you monitor the activity of your caching service?

Like any service, your caching service can come under pressure or heavy load. It's important to monitor how it behaves, and to know which metrics help you understand its state and trigger alerts when thresholds are crossed.

| Monitoring Objectives | Description | Core metrics to collect | Alerts and thresholds |
|---|---|---|---|
| Availability & Health | Is the cache node up and reachable? Are all replicas in sync? | redis_up / memcached_up, connected_clients, role (master/slave) | redis_up == 0 (node down); connected_clients = 0 (no clients) |
| Performance | Is the cache responding quickly? Are commands or connections getting slow? | latency (avg, p95, p99), commands_processed_total, instantaneous_ops_per_sec | latency > 5 ms (Redis); ops_per_sec drop > 30% of baseline |
| Cache Hit Ratio | How effective is the cache at serving hits vs misses? A low hit ratio may indicate a poor caching strategy. | keyspace_hits, keyspace_misses, hit_ratio = hits / (hits + misses) | hit_ratio < 0.8 (underperforming cache) |
| Resource Utilization | Is the cache using memory efficiently? Are evictions increasing? | used_memory, maxmemory, evicted_keys, expired_keys | used_memory > 85% of maxmemory; evicted_keys increasing above baseline |
| Errors & Faults | Are there connection drops or command errors? Any replication or cluster failovers? | rejected_connections, sync_full, master_link_status | rejected_connections > 0; master_link_status != "up" |
| Security & Access | Are there unauthorized connection attempts? Is authentication being enforced? | authentication_errors, client_connections, ACL log entries | authentication_errors > 0; sudden connection spikes > 200% of baseline |
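
A few of the thresholds in the table can be checked with a small function. The sketch below is illustrative only: `check_cache_stats` is a hypothetical helper, and it assumes the statistics arrive as a dict keyed by Redis `INFO` field names (with the redis-py client, `Redis.info()` typically returns a dict of this shape). The sample values are invented.

```python
from typing import Dict, List


def check_cache_stats(stats: Dict[str, float]) -> List[str]:
    """Return alert messages for a dict shaped like Redis INFO output."""
    alerts: List[str] = []

    # Cache hit ratio: hits / (hits + misses), alert below 0.8.
    hits = stats.get("keyspace_hits", 0)
    misses = stats.get("keyspace_misses", 0)
    lookups = hits + misses
    if lookups > 0 and hits / lookups < 0.8:
        alerts.append(f"low hit ratio: {hits / lookups:.2f}")

    # Resource utilization: alert above 85% of maxmemory.
    # maxmemory == 0 means "no limit" in Redis, so the check is skipped then.
    maxmemory = stats.get("maxmemory", 0)
    if maxmemory > 0 and stats.get("used_memory", 0) > 0.85 * maxmemory:
        alerts.append("memory usage above 85% of maxmemory")

    # Errors and faults: any rejected connection is worth an alert.
    if stats.get("rejected_connections", 0) > 0:
        alerts.append("connections are being rejected")

    return alerts


# Invented sample values, for illustration only.
sample = {
    "keyspace_hits": 700,
    "keyspace_misses": 300,
    "used_memory": 900,
    "maxmemory": 1000,
    "rejected_connections": 0,
}
print(check_cache_stats(sample))
```

In practice these checks usually live in a monitoring system's alert rules rather than in application code; the function only shows how the thresholds map onto the raw counters.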

Real-world use cases

Real-world use cases where implementing a caching strategy improved performance and reduced costs.

| Company | Their use cases with Redis | Key metrics | Benefits | Sources |
|---|---|---|---|---|
| Uber | Uses a Redis cache layer to serve a high read volume with low latency | Requests: ~40 million reads/sec; hit rate: ~99% | Large resource and cost savings | More details |
| Ulta Beauty | Used Redis Cloud as the in-memory layer for inventory, carts, and personalization to scale e-commerce | Requests / perf: inventory calls went from ~2 seconds to ~1 millisecond (99% reduction in inventory call time) | Business impact: 40% increase in revenue generation | More details |

Real-world use cases where lack of caching strategies caused revenue loss

| Company | Incident Description | Revenue / Impact | Root Cause / Lessons | Sources |
|---|---|---|---|---|
| Shopify (2020) | High-traffic sale event caused DB bottlenecks for checkout and inventory queries. | Millions in lost sales over peak periods due to abandoned carts. | Insufficient caching layer for high-volume reads; over-reliance on direct DB queries. | More details |
| Ticketmaster (2021) | Inadequate caching of session and availability data caused site slowdowns during major ticket sales. | Hundreds of thousands to millions in lost ticket sales per high-demand event. | Backend queries overloaded DB; no CDN or caching strategy to absorb read spikes. | More details |
| Fortnite / Epic Games (2020–2021) | Massive in-game event traffic caused APIs for inventory and player stats to hit DBs directly. | Microtransaction and in-game purchase delays; millions in potential lost revenue. | Overreliance on DB reads without caching layers for real-time game state. | More details |
| Instacart (2020–2021) | Grocery order volume spikes caused DB latency and failed checkouts due to lack of caching. | Millions in potential sales lost during peak pandemic shopping periods. | High read traffic hit databases directly; caching not optimized for fast-changing inventory/pricing. | More details |
| Zoom (2020) | Rapid surge in meeting/user metadata queries caused backend DB slowdown, affecting session joins and dashboards. | User experience issues; potential churn and delayed enterprise adoption; loss of new clients. | Inadequate caching of session/user metadata at scale; direct DB queries caused bottlenecks. | More details |